How Does Kubernetes Work? A Comprehensive Guide for OpsNexa

In the modern software development landscape, containerization is at the forefront of application deployment and scaling. As businesses like OpsNexa increasingly embrace cloud-native technologies, understanding how Kubernetes works is crucial to maximizing the potential of containerized applications. Kubernetes provides a powerful platform for automating deployment, scaling, and management of containerized applications, making it an essential tool for organizations seeking to improve operational efficiency.

In this guide, we will break down how Kubernetes works, its core components, and how OpsNexa can leverage Kubernetes to streamline its container management and orchestrate large-scale deployments.

What is Kubernetes?

Before understanding how Kubernetes works, it’s important to know what it is. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, management, and networking of containerized applications. Kubernetes abstracts away the underlying hardware infrastructure and provides a unified API for managing your applications in the cloud, on-premises, or in hybrid environments.

Kubernetes works with container runtimes that implement its Container Runtime Interface (CRI), such as containerd and CRI-O, to deploy and manage containerized applications. With Kubernetes, OpsNexa can orchestrate applications across clusters of machines, providing robust scaling, automatic failover, and more.

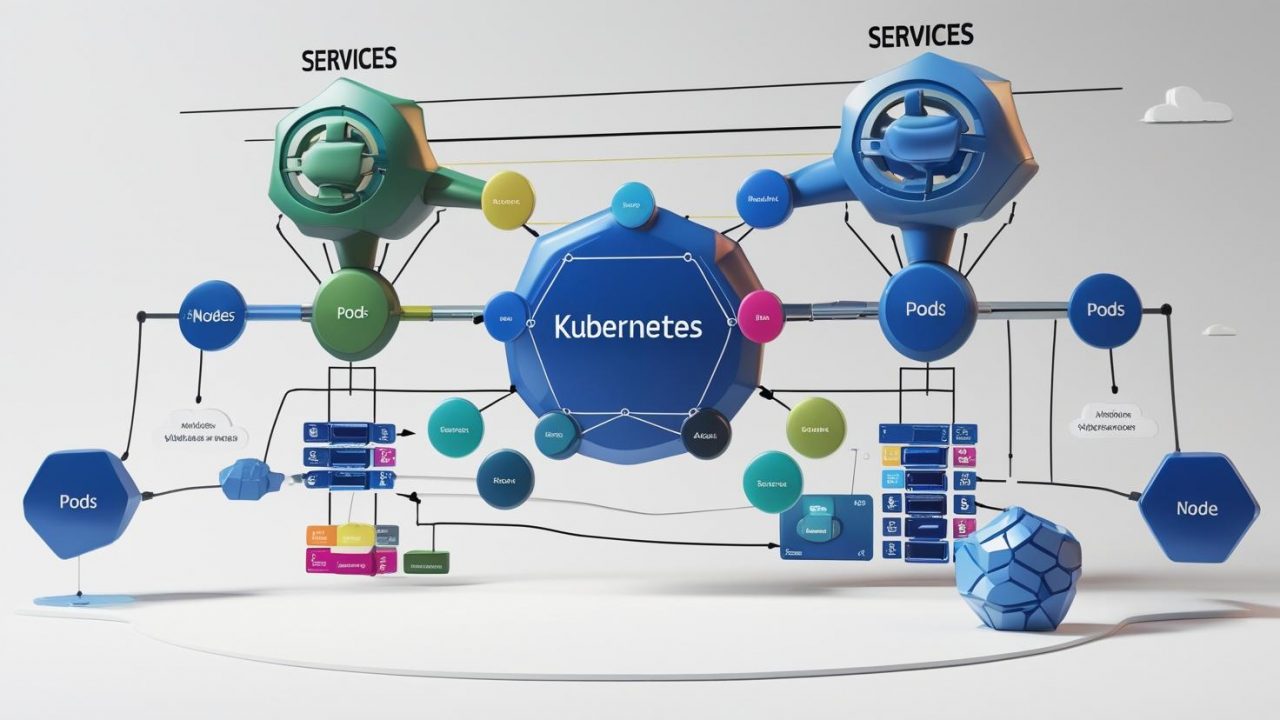

Key Concepts and Components of Kubernetes

Understanding how Kubernetes works begins with grasping its fundamental concepts and architecture. Let’s break down some of the most important components:

1. Cluster

A Kubernetes cluster is a set of physical or virtual machines, called nodes, that run containerized applications managed by Kubernetes. A cluster consists of two main parts:

- Control Plane (historically called the master node): The control plane manages the cluster and makes global decisions about it, such as scheduling workloads and responding when pods or nodes fail. It also maintains the overall state of the cluster.

- Worker Nodes: Worker nodes are where the containers actually run. These nodes host the pods that carry out the work specified by the applications.

For OpsNexa, understanding the structure of a Kubernetes cluster is key to effectively managing the workloads and ensuring the infrastructure is scalable and reliable.

2. Pods

A Pod is the smallest deployable unit in Kubernetes. It represents a single instance of a running process in your cluster. Pods can host one or more containers and provide a shared context (such as networking and storage) for those containers. Containers in the same pod share a network namespace, so they can communicate with one another over localhost.

For example, a pod might run a web server container alongside a sidecar container that ships its logs, with both using a shared volume. Independent microservices, by contrast, usually run in separate pods so that each can be scaled and updated on its own. Pods are ephemeral: they can be replaced or rescheduled at any time, so applications should not rely on a particular pod’s identity or IP address.
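To make the multi-container pod concrete, here is a minimal sketch that builds a Pod manifest with a main container and a log-shipping sidecar as a Python dict, then prints it as JSON (`kubectl apply -f` accepts JSON as well as YAML). The pod name, images, and mount paths are illustrative assumptions, not a recommended OpsNexa setup.

```python
import json
from typing import Any, Dict


def build_pod(name: str) -> Dict[str, Any]:
    """Return a two-container Pod manifest (hypothetical names and images)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Both containers share the pod's network namespace, so the
            # sidecar could also reach the web server at localhost:80.
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    # Mounting the shared volume over nginx's log directory
                    # makes its log files land in that volume.
                    "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
                },
                {
                    "name": "log-shipper",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "tail -F /logs/access.log"],
                    "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
                },
            ],
            # An emptyDir volume lives as long as the pod and is visible to both containers.
            "volumes": [{"name": "logs", "emptyDir": {}}],
        },
    }


if __name__ == "__main__":
    # Usage: python pod.py | kubectl apply -f -
    print(json.dumps(build_pod("demo-web"), indent=2))
```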

3. Deployments

A Deployment is a higher-level abstraction that manages a set of identical pods through a ReplicaSet. It ensures that the specified number of pod replicas is running at all times, helping to maintain the availability of your application. If a pod fails or is deleted, for example because its node goes down, a replacement is created automatically; containers that crash inside a running pod are restarted by the kubelet.

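For OpsNexa, deployments simplify the management of microservices or applications that need to scale up or down with demand. Their rolling-update strategy replaces pods gradually, which, combined with readiness probes, lets updates and scaling events proceed with little or no downtime.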
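A sketch of a Deployment that asks for three replicas and a conservative rolling update, again built as a Python dict for output as JSON. The image name, port 8080, and the `/healthz` readiness endpoint are hypothetical assumptions. With `maxSurge: 1` and `maxUnavailable: 0`, a new pod must become ready before an old one is removed.

```python
import json
from typing import Any, Dict


def build_deployment(name: str, image: str, replicas: int = 3) -> Dict[str, Any]:
    """Return a Deployment manifest; image, port, and probe path are placeholders."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels, otherwise the
            # API server rejects the Deployment.
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add at most one extra pod during a rollout and never drop
                # below the desired replica count.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # A pod only receives Service traffic once this probe succeeds.
                            "readinessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "periodSeconds": 5,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment("orders", "registry.example.com/orders:1.4.2"), indent=2))
```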

4. Services

A Service in Kubernetes is an abstraction that exposes a set of pods as a network service. Services enable communication between different pods and provide stable IP addresses and DNS names for accessing applications. There are different types of services in Kubernetes, such as:

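- ClusterIP (the default): Exposes the service on a cluster-internal IP address, reachable only from within the cluster.

- NodePort: Exposes the service on each node’s IP at a static port (by default allocated from the range 30000-32767).

- LoadBalancer: Provisions an external load balancer for the service, on cloud providers or environments that support it.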

For OpsNexa, services are vital for managing the networking between various microservices or containerized components across different pods.
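A Service finds its pods through a label selector. The sketch below models equality-based selector matching and builds a Service manifest for the three types listed above. The service name, ports, and labels are hypothetical, and the NodePort check assumes the default node port range.

```python
from typing import Any, Dict, Optional

# Default value of kube-apiserver's --service-node-port-range (30000-32767).
NODE_PORT_RANGE = range(30000, 32768)


def selects(selector: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """True if every key/value in the selector also appears in the pod's labels."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


def build_service(name: str, selector: Dict[str, str], port: int, target_port: int,
                  service_type: str = "ClusterIP",
                  node_port: Optional[int] = None) -> Dict[str, Any]:
    """Return a Service manifest; this sketch covers only three Service types."""
    if service_type not in {"ClusterIP", "NodePort", "LoadBalancer"}:
        raise ValueError(f"unsupported type in this sketch: {service_type}")
    port_spec: Dict[str, int] = {"port": port, "targetPort": target_port}
    if node_port is not None:
        if service_type == "ClusterIP":
            raise ValueError("nodePort cannot be set on a ClusterIP service")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside the default range")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, "selector": selector, "ports": [port_spec]},
    }
```

For example, `selects({"app": "orders"}, {"app": "orders", "tier": "backend"})` is true, so that pod would become one of the Service’s endpoints.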

5. Namespaces

A Namespace is a logical partition within a Kubernetes cluster that allows you to group resources together. Kubernetes supports multiple namespaces, making it easier to organize and manage resources, especially in large-scale environments.

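Namespaces are well suited to separating environments, such as development, staging, and production, or to giving different teams their own spaces. On their own, namespaces scope resource names rather than enforce isolation; stronger separation comes from combining them with RBAC, resource quotas, and network policies.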
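Namespaces also scope DNS names. Assuming the default cluster domain `cluster.local` and the default `ClusterFirst` DNS policy for pods, a Service is reachable at `<service>.<namespace>.svc.cluster.local`, so identically named services in `staging` and `production` do not collide. The helper functions below are hypothetical illustrations of that naming scheme.

```python
from typing import List


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def usable_names(client_namespace: str, service: str, namespace: str,
                 cluster_domain: str = "cluster.local") -> List[str]:
    """Names a pod in client_namespace can use, relying on the default DNS search path."""
    names = [
        f"{service}.{namespace}",
        f"{service}.{namespace}.svc",
        service_fqdn(service, namespace, cluster_domain),
    ]
    # The bare service name only resolves from within the same namespace.
    if client_namespace == namespace:
        names.insert(0, service)
    return names
```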

6. ConfigMaps and Secrets

Kubernetes also provides tools for managing configuration and sensitive information:

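- ConfigMaps store non-confidential configuration data as key-value pairs, separately from your application code, so the same container image can be configured differently for each environment.

- Secrets store sensitive data such as passwords, tokens, and keys so that it is not hard-coded into the application. By default, Secret values are only base64-encoded, not encrypted, so protecting them requires measures such as encryption at rest for etcd and RBAC rules that restrict who can read them.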
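The difference between the two objects is easiest to see in their manifests. In this sketch (object names and values are hypothetical), ConfigMap data is stored as plain strings, while Secret `data` values are base64-encoded. Anyone who can read the Secret object can reverse that encoding, which is why base64 should not be mistaken for encryption.

```python
import base64
from typing import Any, Dict


def build_configmap(name: str, values: Dict[str, str]) -> Dict[str, Any]:
    """ConfigMap data is stored as plain strings."""
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": dict(values)}


def build_secret(name: str, values: Dict[str, str]) -> Dict[str, Any]:
    """Secret 'data' values must be base64-encoded strings."""
    encoded = {k: base64.b64encode(v.encode("utf-8")).decode("ascii")
               for k, v in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "type": "Opaque",
            "metadata": {"name": name}, "data": encoded}


def decode_secret_value(secret: Dict[str, Any], key: str) -> str:
    """Recover a value: base64 is an encoding, so no key is needed."""
    return base64.b64decode(secret["data"][key]).decode("utf-8")
```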

How Kubernetes Works: The Control Plane and Worker Nodes

Kubernetes architecture is based on two main parts: the control plane and the worker nodes. These work together to maintain the desired state of your applications and keep everything running smoothly. Let’s break down each of them.

1. The Control Plane

The control plane is responsible for managing the Kubernetes cluster. It contains several key components that ensure the system runs as expected:

- API Server: The API server (kube-apiserver) is the gateway to the cluster. It handles incoming requests, authenticates and validates them, and persists the resulting state in etcd. All communication with Kubernetes (from users, external systems, and other components) goes through the API server; the scheduler, controllers, and kubelets watch it for the changes that concern them.

- Scheduler: The scheduler is responsible for deciding which worker node a newly created pod should run on. It takes into account factors like available node resources, the pod’s resource requests, and constraints or policies defined in the cluster, such as node selectors, affinity rules, and taints.

- Controller Manager: The controller manager runs controllers that continuously compare the cluster’s actual state with its desired state and act to close the gap. For example, if a pod managed by a ReplicaSet fails or is deleted, the ReplicaSet controller creates a new one to replace it.

- etcd: etcd is the consistent, distributed key-value store that Kubernetes uses to hold all cluster data, including configuration, Secrets, and the state of every resource. When run as a multi-member cluster, it keeps that state consistent and highly available.
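The scheduler’s decision can be sketched in two phases: filter out nodes that cannot fit the pod’s resource requests, then score the remaining nodes and pick the best. This is a simplified model, loosely based on kube-scheduler’s filter and score phases and using a "least allocated" score similar to the default strategy of its NodeResourcesFit plugin; the real scheduler runs many more plugins. Node names and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int        # allocatable CPU in millicores
    mem_capacity_mi: int       # allocatable memory in MiB
    cpu_requested_m: int = 0   # sum of CPU requests of pods already on the node
    mem_requested_mi: int = 0  # sum of memory requests of pods already on the node


@dataclass
class PodRequests:
    cpu_m: int
    mem_mi: int


def fits(node: Node, pod: PodRequests) -> bool:
    """Filter phase: the node must have room for the pod's requests."""
    return (node.cpu_requested_m + pod.cpu_m <= node.cpu_capacity_m
            and node.mem_requested_mi + pod.mem_mi <= node.mem_capacity_mi)


def least_allocated_score(node: Node, pod: PodRequests) -> float:
    """Score phase: average fraction of CPU and memory still free after placement."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - pod.cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - pod.mem_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(pod: PodRequests, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node's name, or None if no node fits."""
    feasible = [n for n in nodes if fits(n, pod)]
    if not feasible:
        return None  # the real scheduler would leave such a pod Pending
    return max(feasible, key=lambda n: least_allocated_score(n, pod)).name
```

Scoring by free capacity spreads pods across nodes; other scoring strategies instead pack pods tightly to free up whole machines.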

2. The Worker Nodes

The worker nodes are where your containers (running in Pods) are executed. Worker nodes contain the following components:

- Kubelet: The kubelet is an agent that runs on each node and makes sure the containers described in the pods assigned to that node are running and healthy. It reports the status of the node and its pods back to the API server.

- Kube Proxy: kube-proxy runs on each node and maintains network rules (for example, iptables or IPVS rules) so that traffic sent to a Service’s IP address and port reaches one of the pods backing that Service.

- Container Runtime: The container runtime is the software that actually runs containers. Kubernetes talks to it through the Container Runtime Interface (CRI), and common choices include containerd and CRI-O. Since Kubernetes v1.24, Docker Engine is no longer supported directly and needs the cri-dockerd adapter, although images built with Docker run unchanged.
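In its default iptables mode, kube-proxy does not forward packets itself; it programs kernel rules. The sketch below only models the effect of those rules: traffic to one Service is spread across its ready backend pods, and the backend list is rebuilt whenever the endpoints change. It uses round-robin, which resembles the default scheduler of IPVS mode (iptables mode picks backends at random). The class name and IP addresses are hypothetical.

```python
import itertools
from typing import Iterable, List, Tuple


class ServiceBackends:
    """Toy model of how traffic to one Service is spread over its endpoints."""

    def __init__(self, endpoints: Iterable[Tuple[str, bool]]) -> None:
        self.update(endpoints)

    def update(self, endpoints: Iterable[Tuple[str, bool]]) -> None:
        # Rebuild the backend list when endpoints change, much as kube-proxy
        # rewrites its rules; only ready endpoints receive traffic.
        self._ready: List[str] = [ip for ip, ready in endpoints if ready]
        self._cycle = itertools.cycle(self._ready)

    def pick_backend(self) -> str:
        """Return the next ready pod IP in round-robin order."""
        if not self._ready:
            raise RuntimeError("service has no ready endpoints")
        return next(self._cycle)
```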

How Kubernetes Achieves Desired State and Automation

One of the core principles of Kubernetes is the desired state model. Kubernetes allows you to define the desired state of your applications, such as the number of replicas, resource limits, and container images, in configuration files (often written in YAML or JSON format). Kubernetes then works to ensure that the actual state of the system matches the desired state.

For example, if you define a deployment with three replicas, Kubernetes will continuously monitor the cluster to ensure that three pods are running. If one pod crashes or becomes unresponsive, Kubernetes will automatically create a new one to replace it, ensuring your application always has the desired number of replicas.
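The three-replica example above follows the control-loop pattern: observe the actual state, compare it with the desired state, and act to close the gap. Here is a minimal sketch of one such loop. The class is hypothetical, pod names use a simple counter where real pods get random suffixes, and surplus pods are removed by name order rather than by the ranking a real ReplicaSet controller uses.

```python
import itertools
from typing import Set


class ReplicaController:
    """Toy controller that converges a set of pod names toward a desired count."""

    def __init__(self, name: str, desired: int) -> None:
        self.name = name
        self.desired = desired
        self._ids = itertools.count(1)

    def reconcile(self, running: Set[str]) -> Set[str]:
        """Run one pass of the loop and return the resulting set of pods."""
        pods = set(running)
        # Too few pods (e.g. one crashed): create replacements.
        while len(pods) < self.desired:
            pods.add(f"{self.name}-{next(self._ids)}")
        # Too many pods (e.g. replicas scaled down): delete the surplus.
        while len(pods) > self.desired:
            pods.remove(max(pods))
        return pods
```

Usage: starting from no pods, `ReplicaController("web", 3).reconcile(set())` creates `web-1`, `web-2`, and `web-3`; if `web-2` then disappears, the next pass adds `web-4`.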

Kubernetes is also self-healing: it restarts containers that crash or fail their liveness probes, replaces pods whose nodes become unavailable, and stops sending Service traffic to pods that fail their readiness probes, all without manual intervention.
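Self-healing restarts are throttled so that a broken container does not restart in a tight loop. According to the Kubernetes documentation, the kubelet applies an exponential back-off that starts at 10 seconds, doubles after each restart, and is capped at five minutes (the familiar `CrashLoopBackOff` state), resetting once a container has run cleanly for 10 minutes. A minimal sketch of that default schedule; the function name is hypothetical, and newer Kubernetes versions may allow the back-off to be tuned.

```python
from typing import List


def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> List[int]:
    """Delay in seconds before each of the next `restarts` container restarts."""
    delays: List[int] = []
    delay = initial_s
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap_s)  # double, but never exceed five minutes
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```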

How OpsNexa Can Leverage Kubernetes

For OpsNexa, leveraging Kubernetes provides several benefits:

- Scalability: Kubernetes makes it easy to scale applications up or down based on demand. With features like horizontal pod autoscaling, OpsNexa can adjust the number of replicas automatically as load changes, without manual intervention.

- Fault Tolerance: Kubernetes improves availability by automatically replacing failed pods and rescheduling workloads onto healthy nodes. This reduces downtime and enhances the reliability of applications.

- Automation: Kubernetes automates deployment, scaling, and management, freeing OpsNexa’s developers and operations teams to focus on higher-value tasks rather than manual processes.

- Efficiency: The scheduler places pods on nodes according to their declared CPU and memory requests, so many workloads can share the same machines and hardware capacity is used more fully.
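Horizontal pod autoscaling rests on a simple documented rule: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when that ratio is within a tolerance of 1.0 (0.1 by default). A minimal sketch of the calculation, clamped to hypothetical minimum and maximum replica counts; the real controller also handles missing metrics, pods that are not yet ready, and stabilization windows, which are omitted here.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, e.g. with metrics as average CPU utilization in percent."""
    ratio = current_metric / target_metric
    # Close enough to the target: skip scaling to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three replicas averaging 90% CPU against a 60% target give `ceil(3 * 1.5) = 5` replicas.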

Conclusion: How Kubernetes Works for OpsNexa

Kubernetes is a powerful container orchestration platform that automates many of the operational tasks associated with deploying and managing containerized applications. By understanding how Kubernetes works, from its architecture to key components such as pods, services, and deployments, OpsNexa can take full advantage of Kubernetes’ capabilities to streamline operations and improve scalability, fault tolerance, and automation.

By using Kubernetes to manage containerized applications, OpsNexa can ensure efficient resource utilization, minimize downtime, and accelerate the delivery of applications. Whether you are deploying applications on-premises or in the cloud, Kubernetes offers the flexibility and scalability required to keep your systems running smoothly.

You can also contact OpsNexa for DevOps architecture and DevOps hiring solutions.