Does Kubernetes Use Docker? Understanding the Relationship for OpsNexa

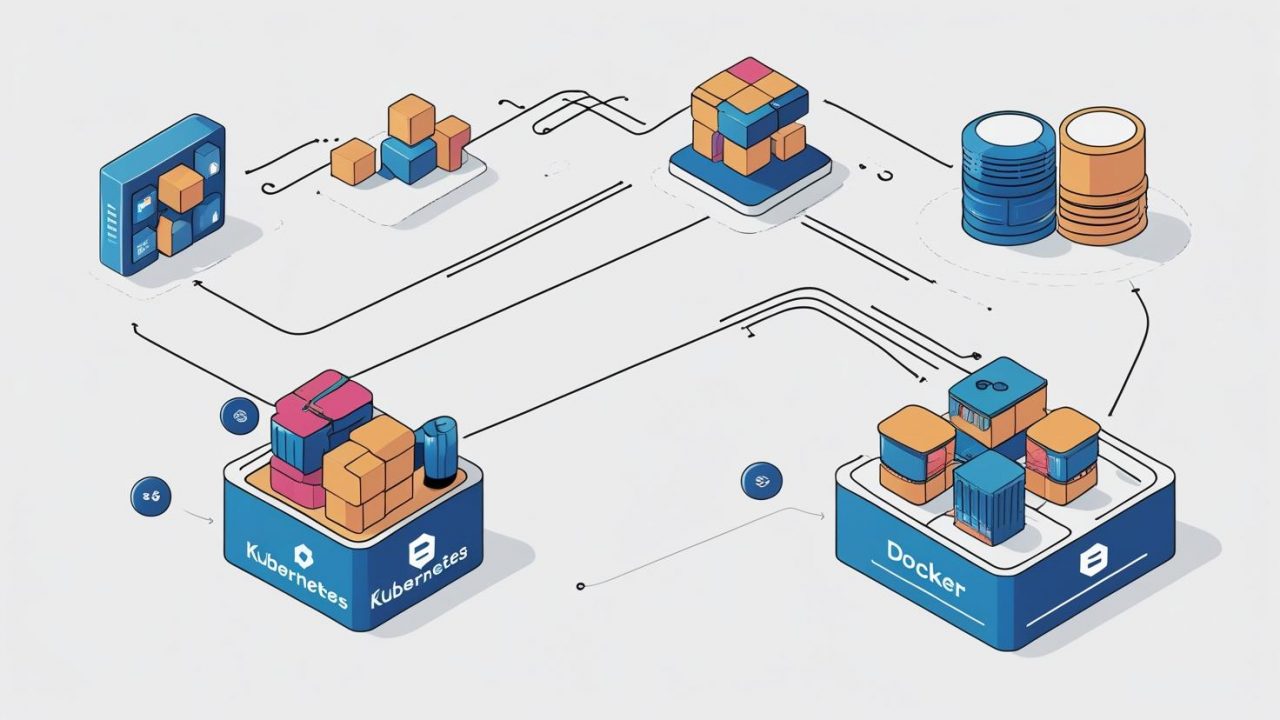

As businesses like OpsNexa adopt Kubernetes for container orchestration, one common question arises: Does Kubernetes use Docker? To fully understand how Kubernetes and Docker work together, it’s important to clarify the relationship between these two technologies and their roles in containerized application management.

In this blog post, we’ll explore whether Kubernetes still uses Docker, how they complement each other, and what this means for businesses like OpsNexa that rely on cloud-native infrastructure.

What is Docker?

Before diving into Kubernetes, let’s first clarify what Docker is.

Docker is a platform for developing, shipping, and running applications inside containers. A container packages an application along with all its dependencies, libraries, and configurations, making it portable and consistent across different environments.

With Docker, developers can:

- Build container images from Dockerfiles.
- Run containers on local machines or servers.
- Share images via Docker Hub or other registries.
- Scale applications with container orchestration tools like Kubernetes.

Docker simplifies application development and deployment, making it one of the most popular tools in the containerization ecosystem.

What is Kubernetes?

Kubernetes (often referred to as K8s) is an open-source container orchestration platform. It automates the deployment, scaling, and management of containerized applications. Kubernetes does not run containers itself; it schedules them across a cluster of machines and delegates the work of starting and stopping them to a container runtime on each node.

While Docker provides the container engine, Kubernetes handles tasks like:

- Deploying containers across nodes.
- Scaling applications up or down based on traffic.
- Managing container health and ensuring high availability.

In short, Kubernetes orchestrates containers, while a container runtime (historically Docker) creates and runs them.

Does Kubernetes Use Docker?

In the past, Kubernetes used Docker as its default container runtime. Docker would be responsible for creating, managing, and running containers in the Kubernetes cluster. Kubernetes would delegate the task of container lifecycle management to Docker, while orchestrating them across nodes.

However, Kubernetes has evolved, and while Docker remains important, it is no longer the default container runtime. Kubernetes has transitioned to a more flexible approach, allowing the use of multiple container runtimes.

1. Kubernetes and Docker (Historically)

Earlier, Docker was the primary container runtime for Kubernetes. It would pull container images from a registry, run them as containers, and provide an API to interact with those containers. Kubernetes would rely on Docker’s API to manage and schedule containers across the cluster.

2. The Shift to Other Runtimes

Kubernetes 1.20 deprecated dockershim, the built-in adapter that let Kubernetes talk to Docker Engine, and Kubernetes 1.24 removed it. This doesn’t mean Docker is unusable: Docker Engine can still serve as a node runtime through the external cri-dockerd adapter, but Kubernetes no longer talks to Docker directly. Instead, Kubernetes relies on a standard interface called the Container Runtime Interface (CRI) to support multiple container runtimes.
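
To make the idea of a runtime interface concrete, here is a minimal sketch in Python. It is a toy model, not the real CRI: the actual interface is a gRPC API with far more calls, and every class and method name below (`ContainerRuntime`, `FakeRuntime`, `Kubelet`, `run_pod_container`) is a hypothetical simplification chosen for illustration.

```python
from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Toy stand-in for the CRI: the only surface the node agent talks to."""

    @abstractmethod
    def pull_image(self, image):
        ...

    @abstractmethod
    def start_container(self, name, image):
        ...


class FakeRuntime(ContainerRuntime):
    """Hypothetical runtime that records calls instead of running containers."""

    def __init__(self, runtime_name):
        self.runtime_name = runtime_name
        self.pulled = []

    def pull_image(self, image):
        self.pulled.append(image)

    def start_container(self, name, image):
        # Return a made-up identifier so callers can see which runtime ran it.
        return f"{self.runtime_name}://{name}"


class Kubelet:
    """Simplified node agent: depends on the interface, not on one runtime."""

    def __init__(self, runtime):
        self.runtime = runtime

    def run_pod_container(self, name, image):
        self.runtime.pull_image(image)
        return self.runtime.start_container(name, image)


# The same node-agent code works unchanged with either runtime.
for runtime in (FakeRuntime("containerd"), FakeRuntime("cri-o")):
    print(Kubelet(runtime).run_pod_container("web", "nginx:1.25"))
```

The point of the sketch is the dependency direction: the node agent is written against the interface, so swapping the runtime requires no change to the orchestration code.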

Now, Kubernetes can work with runtimes such as:

- containerd: A container runtime that originated as part of Docker and is now an independent CNCF project. containerd can run containers without Docker Engine and is widely used in production environments.
- CRI-O: A lightweight container runtime specifically designed for Kubernetes. It provides the functionality needed for running containers in Kubernetes without the extra features provided by Docker.
- Other runtimes: Under the hood, containerd and CRI-O hand the actual container start to a low-level OCI runtime, usually runC. They can also be configured to use a sandboxed alternative such as gVisor for workloads that need stronger isolation.

These runtimes are compatible with Kubernetes, giving teams more flexibility in choosing the best solution for their needs.
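
Each CRI runtime listens on a Unix socket that Kubernetes connects to. As a rough sketch, the following Python snippet checks which of the commonly documented default socket paths exist on a Linux host. The paths are the defaults listed in the Kubernetes container runtime documentation as far as we know; a given distribution or installer may use different ones, so treat the table as an assumption to verify on your own nodes. The function name `detect_cri_runtimes` is ours.

```python
import os

# Assumed default CRI socket paths on Linux; installers may override these.
DEFAULT_CRI_SOCKETS = {
    "containerd": "/var/run/containerd/containerd.sock",
    "CRI-O": "/var/run/crio/crio.sock",
    "Docker Engine (via cri-dockerd)": "/var/run/cri-dockerd.sock",
}


def detect_cri_runtimes(exists=os.path.exists):
    """Return the runtimes whose default socket path is present on this host.

    `exists` is injectable so the check can be exercised without a real node.
    """
    return [name for name, path in DEFAULT_CRI_SOCKETS.items() if exists(path)]


if __name__ == "__main__":
    found = detect_cri_runtimes()
    print(found if found else "no default CRI socket found")
```

A present socket only suggests that a runtime is installed; it does not prove that Kubernetes on that node is configured to use it.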

3. What Does This Mean for Kubernetes and Docker Users?

Although Kubernetes no longer uses Docker as its container runtime by default, Docker images are still fully supported in Kubernetes. You can still use Docker to build images and then deploy them on Kubernetes, regardless of the underlying container runtime.

For businesses like OpsNexa, this change is not a huge disruption. Here’s why:

- Docker images still work: You can continue building images with Docker. They follow the Open Container Initiative (OCI) image format, so Kubernetes will run them using whichever container runtime you choose (containerd, CRI-O, etc.).
- Flexibility in runtime choice: Kubernetes’ support for multiple container runtimes means you can choose the best one for your infrastructure. This gives your team flexibility in terms of performance, security, and compatibility.
- Container runtime abstraction: Kubernetes abstracts the details of the container runtime, so for most workloads you don’t have to worry about which runtime is being used. You’ll still interact with Kubernetes the same way, and it will handle all the orchestration and scaling tasks. The main exception is tooling that talks to the Docker socket on a node directly, which needs to be adapted when the node no longer runs Docker Engine.
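
The runtime abstraction is visible in the manifests themselves. The sketch below builds a minimal Pod manifest as a Python dictionary and prints it as JSON. The helper name `pod_manifest` and the image reference `registry.example.com/opsnexa/web:1.0` are placeholders for illustration, not real OpsNexa artifacts.

```python
import json


def pod_manifest(name, image):
    """Build a minimal Pod manifest; no required field names a runtime."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"containers": [{"name": name, "image": image}]},
    }


# Placeholder image reference; substitute an image you have actually pushed.
manifest = pod_manifest("web", "registry.example.com/opsnexa/web:1.0")
print(json.dumps(manifest, indent=2))
```

The manifest names an image, not a runtime, so the same file works whether the node runs containerd, CRI-O, or Docker Engine behind cri-dockerd. The output can be saved to a file and applied with `kubectl apply -f`, since kubectl accepts JSON as well as YAML.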

Why Did Kubernetes Move Away from Docker?

The decision to deprecate Docker as the default container runtime in Kubernetes was driven by several factors:

- Container Runtime Interface (CRI): The CRI allows Kubernetes to communicate with different container runtimes through a unified interface. This provides flexibility and enables Kubernetes to support runtimes other than Docker.
- Efficiency and Performance: Docker offers more functionality than Kubernetes needs. For example, Docker manages image building, network configurations, and volume management. Kubernetes, however, only needs to focus on orchestrating containers, so using a lighter-weight runtime like containerd can improve performance and reduce overhead.
- Modularity: By using runtimes like containerd or CRI-O, Kubernetes becomes more modular. This allows you to use the best runtime for your specific needs, whether that’s performance, security, or scalability.
- Future-proofing: Kubernetes is designed to be highly adaptable and flexible. Supporting multiple runtimes ensures Kubernetes can keep up with the changing landscape of container technologies and remain vendor-neutral.
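
The overhead argument is easier to see when the components are written out. The following sketch lists the chain of components involved in starting a container in each setup and counts the hand-offs. The chains are simplified (the per-container shim processes and the CRI plugin inside containerd are folded into their parents), so read the numbers as an illustration rather than a benchmark.

```python
# Simplified component chains; shims and containerd's CRI plugin are omitted.
VIA_DOCKER = ["kubelet", "dockershim", "dockerd", "containerd", "runc"]
VIA_CONTAINERD = ["kubelet", "containerd", "runc"]


def hops(chain):
    """Number of hand-offs between the first and last component in a chain."""
    return len(chain) - 1


for label, chain in (("Docker Engine", VIA_DOCKER), ("containerd", VIA_CONTAINERD)):
    print(f"{label}: {' -> '.join(chain)} ({hops(chain)} hops)")
```

Docker Engine already used containerd internally, so talking to containerd directly removes two intermediate layers without changing what finally runs the container.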

What Does This Mean for OpsNexa?

For OpsNexa, the change in Kubernetes’ default container runtime is not likely to cause any disruption. In fact, it can offer several benefits:

- Docker for image creation: Developers can continue using Docker for building container images. Docker remains one of the most popular tools for creating images, and Kubernetes can deploy those images using the container runtime of choice.
- Choose the right runtime: If you’re setting up a Kubernetes cluster at OpsNexa, you have the flexibility to choose the container runtime that fits your infrastructure. You may opt for containerd for better performance or CRI-O for a Kubernetes-optimized solution.
- Simplified management: Kubernetes abstracts the container runtime, meaning you don’t have to worry about which one is being used. Kubernetes handles the orchestration and scaling, ensuring everything runs smoothly, regardless of the underlying runtime.
- Vendor-neutrality: With Kubernetes supporting multiple container runtimes, OpsNexa can avoid vendor lock-in. This gives you the flexibility to choose cloud providers, tools, and runtimes based on your needs.
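
Before changing anything, it helps to know which runtime each node in an existing cluster reports. Kubernetes exposes this per node in the `status.nodeInfo.containerRuntimeVersion` field, with values such as `containerd://1.7.2`. The sketch below parses values of that shape. The node names and versions are made-up sample data, and `parse_runtime` is a hypothetical helper; in practice the values would come from `kubectl get nodes -o json`.

```python
def parse_runtime(container_runtime_version):
    """Split a value such as 'containerd://1.7.2' into (runtime, version)."""
    runtime, sep, version = container_runtime_version.partition("://")
    if not sep:
        raise ValueError(f"unexpected format: {container_runtime_version!r}")
    return runtime, version


# Hypothetical sample data standing in for real cluster output.
nodes = {
    "node-a": "containerd://1.7.2",
    "node-b": "cri-o://1.28.1",
    "node-c": "docker://24.0.5",
}

for node, value in nodes.items():
    runtime, version = parse_runtime(value)
    # Nodes still reporting Docker need the cri-dockerd adapter from 1.24 on.
    note = " (needs cri-dockerd on Kubernetes 1.24+)" if runtime == "docker" else ""
    print(f"{node}: {runtime} {version}{note}")
```

Nodes that report `docker` are the ones to plan for when upgrading past Kubernetes 1.24; nodes on containerd or CRI-O are unaffected by the dockershim removal.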

Conclusion

To summarize, Kubernetes no longer uses Docker as its default container runtime, but Docker images remain fully compatible with Kubernetes. Kubernetes has moved towards a more flexible model, supporting runtimes like containerd and CRI-O that are more efficient for container orchestration.

For OpsNexa, this change means greater flexibility in choosing the best container runtime for your infrastructure, while continuing to rely on Docker for image creation. Kubernetes abstracts the runtime layer, ensuring a seamless experience regardless of the container runtime used.

By understanding this shift in Kubernetes, OpsNexa can stay ahead of the curve and take advantage of the latest advancements in containerization technology.

You can also contact OpsNexa for DevOps architect and DevOps hiring solutions.