How to Create a Kubernetes Cluster: A Complete Guide by OpsNexa

As containerized applications become the standard for scalable and resilient deployments, understanding how to create a Kubernetes cluster is essential for any DevOps team. At OpsNexa, we specialize in helping organizations build and manage efficient Kubernetes infrastructures. This in-depth guide walks you through creating Kubernetes clusters using three primary methods: Minikube for local testing, kubeadm for custom deployments, and managed services like GKE, EKS, and AKS.

By the end of this guide, you’ll understand not only how to create a Kubernetes cluster, but also how to tailor it for production-readiness, performance, and security.

What Is a Kubernetes Cluster and Why Does It Matter?

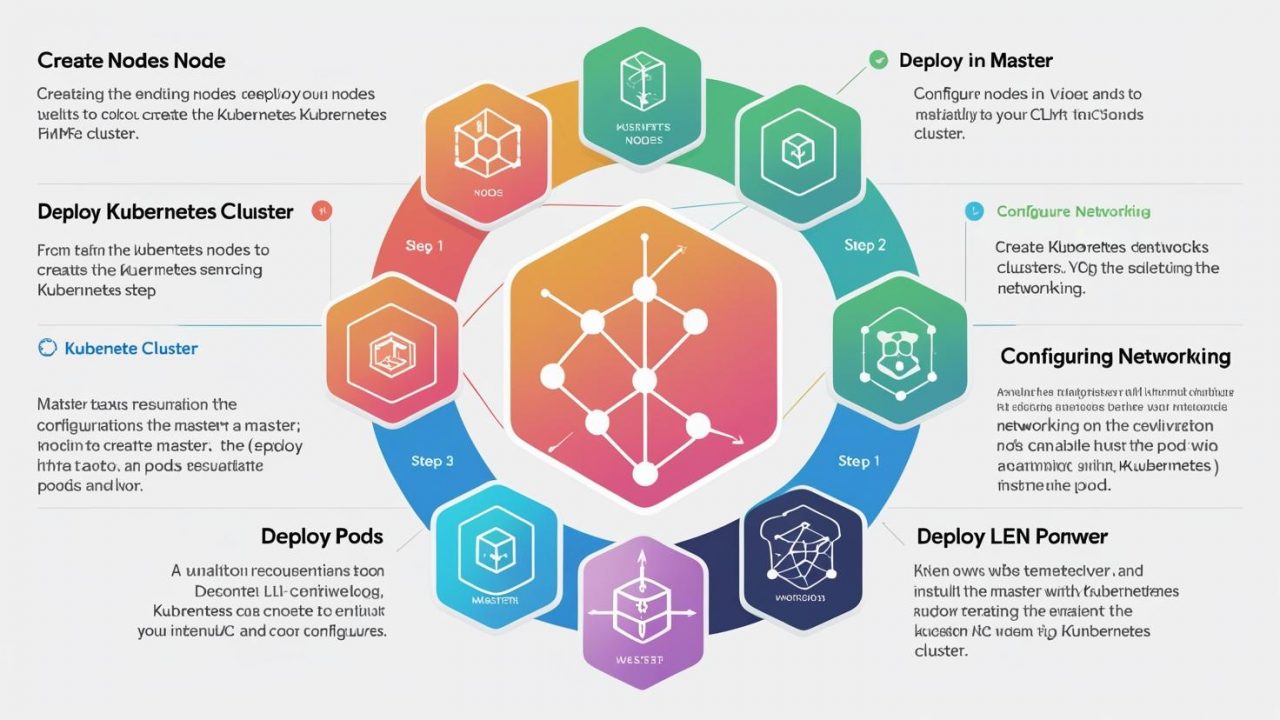

A Kubernetes cluster is a set of nodes (machines) that run containerized applications orchestrated by Kubernetes. The cluster consists of:

- Control Plane: Manages the cluster state and scheduling.
- Worker Nodes: Run the containerized applications (Pods).
- etcd: A key-value store, normally run as part of the control plane, that saves cluster configuration and state.

When set up properly, a Kubernetes cluster provides:

- High availability
- Self-healing capabilities
- Automated scaling and deployment
- Centralized configuration management

Creating a cluster is the foundational step in leveraging Kubernetes’ full potential. Whether you’re running a single-node development cluster or a multi-region production setup, the configuration process defines your system’s reliability, security, and scalability.

Prerequisites for Creating a Kubernetes Cluster

Before creating a cluster, make sure the following are in place:

System Requirements:

- At least 2 CPUs and 2 GB RAM per node
- Ubuntu 20.04 / CentOS 8 (or newer)
- Network access between nodes
- Swap disabled (sudo swapoff -a; also remove any swap entry from /etc/fstab so swap stays off after a reboot)
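The requirements above can be checked on a Linux node before installing anything. The following is a minimal sketch: the function names are illustrative, and the 1700 MB threshold is an assumption that allows for a 2 GB machine reporting slightly less than 2048 MB of usable memory.

```shell
# Hypothetical helper: succeeds only if the node meets the minimums.
# Arguments: CPU count, total memory in MB, configured swap in kB.
meets_minimums() {
  [ "$1" -ge 2 ] && [ "$2" -ge 1700 ] && [ "$3" -eq 0 ]
}

# Read the real values from the node and report the result.
preflight() {
  cpus=$(nproc)
  mem_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
  swap_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
  if meets_minimums "$cpus" "$mem_mb" "$swap_kb"; then
    echo "node ready"
  else
    echo "node below minimums (cpus=$cpus mem_mb=$mem_mb swap_kb=$swap_kb)"
    return 1
  fi
}
```

Running `preflight` on each node before bootstrapping gives an early, readable failure instead of an error halfway through cluster creation.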

Essential Tools:

- kubectl: Command-line interface to manage Kubernetes
- Docker or containerd: Container runtime (on Kubernetes 1.24 and later, Docker Engine needs the cri-dockerd adapter)
- kubeadm: CLI tool for bootstrapping clusters
- SSH access (for multi-node clusters)

Install kubectl:
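A minimal sketch of this step, assuming a Linux amd64 host with curl and sudo available. The function names are illustrative; the download location follows the pattern used by the official Kubernetes release server.

```shell
# Build the download URL for a given kubectl version and CPU architecture.
kubectl_url() {
  echo "https://dl.k8s.io/release/$1/bin/linux/$2/kubectl"
}

# Download the latest stable kubectl for linux/amd64 and install it system-wide.
install_kubectl() (
  set -eu
  version=$(curl -L -s https://dl.k8s.io/release/stable.txt)
  curl -LO "$(kubectl_url "$version" amd64)"
  sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
  kubectl version --client
)
```

Call `install_kubectl` to perform the installation. On ARM machines, pass `arm64` instead of `amd64` to `kubectl_url`.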

Install kubeadm and dependencies:
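On Ubuntu, this step could look like the sketch below. It assumes the pkgs.k8s.io package repository and Kubernetes v1.30 (both the pinned version and the function names are assumptions to adjust). Container runtime configuration, such as the cgroup driver and kernel modules, is not covered here.

```shell
# Assumed Kubernetes minor version; change it to the release you are targeting.
K8S_MINOR="v1.30"

# Print the apt source line for the pkgs.k8s.io repository.
k8s_apt_source() {
  echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

# Install containerd, kubelet, kubeadm, and kubectl, then pin their versions.
install_kubeadm() (
  set -eu
  sudo apt-get update
  sudo apt-get install -y apt-transport-https ca-certificates curl gpg containerd
  sudo mkdir -p -m 755 /etc/apt/keyrings
  curl -fsSL "https://pkgs.k8s.io/core:/stable:/$K8S_MINOR/deb/Release.key" |
    sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
  k8s_apt_source "$K8S_MINOR" | sudo tee /etc/apt/sources.list.d/kubernetes.list
  sudo apt-get update
  sudo apt-get install -y kubelet kubeadm kubectl
  # Hold the packages so routine upgrades do not change cluster versions.
  sudo apt-mark hold kubelet kubeadm kubectl
)
```

Run `install_kubeadm` on every node, control plane and workers alike.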

At OpsNexa, we recommend automating these steps using scripts or infrastructure-as-code tools like Terraform and Ansible for reproducibility.

Method 1 – Creating a Local Kubernetes Cluster with Minikube

Minikube is the easiest way to create a local Kubernetes cluster for learning and development.

Step-by-Step Instructions:

- Install Minikube
- Start the Cluster
- Verify Cluster Status
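The three steps above can be sketched as follows, assuming a Linux amd64 machine with Docker already installed. The function names and the choice of the docker driver are assumptions.

```shell
# Step 1: download the latest Minikube binary and install it.
install_minikube() (
  set -eu
  curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64
  sudo install minikube-linux-amd64 /usr/local/bin/minikube
)

# Steps 2 and 3: start a single-node cluster, then verify it.
start_minikube() (
  set -eu
  minikube start --driver=docker
  minikube status
  kubectl get nodes
)
```

After `start_minikube` completes, `kubectl get nodes` should list one node; it may take a short while to report Ready.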

Minikube supports addons like Ingress, Dashboard, and Metrics Server. Enable them as needed:
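A small sketch for enabling addons; the wrapper function is illustrative, while `minikube addons enable` is the underlying command.

```shell
# Enable each Minikube addon named on the command line.
enable_minikube_addons() (
  set -eu
  for addon in "$@"; do
    minikube addons enable "$addon"
  done
)
```

For example, `enable_minikube_addons ingress dashboard metrics-server` turns on the three addons mentioned above, and `minikube addons list` shows what is available.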

Ideal for developers, Minikube replicates a real Kubernetes environment without requiring cloud infrastructure.

Method 2 – Creating a Kubernetes Cluster with kubeadm

For greater control, use kubeadm to bootstrap a custom cluster on your servers or virtual machines.

Step-by-Step Instructions:

- Initialize the Control Plane Node
- Configure kubectl Access
- Install a Pod Network (Flannel example)
- Join Worker Nodes: Run the kubeadm join command generated during init on each worker node.
- Verify Nodes
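The kubeadm steps above might be sketched as shown here. The function names are illustrative, and the Pod CIDR is an assumption chosen to match the default in Flannel's manifest.

```shell
# Assumed Pod CIDR; 10.244.0.0/16 is the default in Flannel's manifest.
POD_CIDR="10.244.0.0/16"

# Step 1: initialize the control plane (run on the control-plane node).
init_control_plane() (
  set -eu
  sudo kubeadm init --pod-network-cidr="$POD_CIDR"
)

# Step 2: copy the admin kubeconfig so kubectl works for the current user.
configure_kubectl() (
  set -eu
  mkdir -p "$HOME/.kube"
  sudo cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
  sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"
)

# Step 3: install Flannel as the Pod network.
install_flannel() (
  set -eu
  kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
)

# Step 4: print a fresh join command to run (with sudo) on each worker node.
print_join_command() (
  set -eu
  sudo kubeadm token create --print-join-command
)

# Step 5: confirm that every node has registered.
verify_nodes() (
  set -eu
  kubectl get nodes -o wide
)
```

`print_join_command` is useful when the join command printed by `kubeadm init` has been lost or its token has expired. Nodes typically report NotReady until the Pod network from step 3 is running.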

With kubeadm, you’re responsible for setting up networking, storage, and HA features. OpsNexa recommends this approach for internal or hybrid cloud deployments.

Method 3 – Creating Kubernetes Clusters in the Cloud (GKE, EKS, AKS)

Cloud-managed Kubernetes platforms offer simplicity, security, and scalability out of the box. Here’s how to create a cluster on the top three providers.

Google Kubernetes Engine (GKE):

- Authenticate with Google Cloud
- Create the Cluster
- Configure kubectl
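A sketch of the GKE steps, assuming the gcloud CLI is installed and a default project is already set. The cluster name, zone, and node count are placeholder assumptions.

```shell
# Hypothetical settings; substitute your own cluster name and zone.
GKE_CLUSTER="demo-cluster"
GKE_ZONE="us-central1-a"

# Authenticate, create a three-node cluster, and point kubectl at it.
create_gke_cluster() (
  set -eu
  gcloud auth login
  gcloud container clusters create "$GKE_CLUSTER" --zone "$GKE_ZONE" --num-nodes 3
  gcloud container clusters get-credentials "$GKE_CLUSTER" --zone "$GKE_ZONE"
  kubectl get nodes
)
```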

Amazon EKS:

- Install eksctl
- Create the Cluster
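A sketch of the EKS steps, assuming a Linux amd64 host with AWS credentials already configured. The cluster name, region, and node count are placeholder assumptions.

```shell
# Hypothetical settings; adjust to your AWS account.
EKS_CLUSTER="demo-cluster"
EKS_REGION="us-east-1"

# Download the latest eksctl release and put it on the PATH.
install_eksctl() (
  set -eu
  curl -sLO "https://github.com/eksctl-io/eksctl/releases/latest/download/eksctl_Linux_amd64.tar.gz"
  tar -xzf eksctl_Linux_amd64.tar.gz -C /tmp
  sudo mv /tmp/eksctl /usr/local/bin/eksctl
)

# Create a three-node cluster; eksctl also writes the kubeconfig entry.
create_eks_cluster() (
  set -eu
  eksctl create cluster --name "$EKS_CLUSTER" --region "$EKS_REGION" --nodes 3
  kubectl get nodes
)
```

Cluster creation with eksctl can take a while because it provisions the supporting AWS networking and node groups as well.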

Azure AKS:

- Log in to Azure
- Create Resource Group and AKS Cluster
- Connect kubectl
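A sketch of the AKS steps, assuming the Azure CLI is installed. The resource group, cluster name, location, and node count are placeholder assumptions.

```shell
# Hypothetical settings; substitute your own names and region.
AKS_GROUP="demo-rg"
AKS_CLUSTER="demo-aks"
AKS_LOCATION="eastus"

# Log in, create the resource group and cluster, then fetch credentials.
create_aks_cluster() (
  set -eu
  az login
  az group create --name "$AKS_GROUP" --location "$AKS_LOCATION"
  az aks create --resource-group "$AKS_GROUP" --name "$AKS_CLUSTER" --node-count 3 --generate-ssh-keys
  az aks get-credentials --resource-group "$AKS_GROUP" --name "$AKS_CLUSTER"
  kubectl get nodes
)
```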

These platforms run the control plane for you and provide built-in options for upgrades, autoscaling, and monitoring, which makes them well suited to production environments.

Post-Cluster Creation Best Practices (OpsNexa Tips)

Creating a Kubernetes cluster is just the start. Ensuring it runs smoothly requires a few essential post-deployment steps:

1. Install Monitoring and Logging

- Prometheus + Grafana for metrics
- Fluentd or Loki for logs
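One common way to get Prometheus and Grafana running is the community kube-prometheus-stack Helm chart. The sketch below assumes Helm 3 is installed; the release name and namespace are arbitrary choices, and log collection is not covered.

```shell
# Install Prometheus and Grafana from the kube-prometheus-stack chart.
install_monitoring() (
  set -eu
  helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
  helm repo update
  # "monitoring" is a hypothetical release name and namespace.
  helm install monitoring prometheus-community/kube-prometheus-stack \
    --namespace monitoring --create-namespace
)
```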

2. Set Up Role-Based Access Control (RBAC)

Limit permissions to least privilege using custom roles and bindings.
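As an illustration of least privilege, the sketch below grants one user read-only access to Pods in a single namespace. The role name, binding name, and function name are hypothetical.

```shell
# Grant read-only Pod access in one namespace.
# Arguments: namespace, user name.
grant_pod_reader() (
  set -eu
  ns=$1
  user=$2
  kubectl create role pod-reader --verb=get,list,watch --resource=pods --namespace "$ns"
  kubectl create rolebinding pod-reader-binding --role=pod-reader --user="$user" --namespace "$ns"
  # Confirm the binding took effect by impersonating the user.
  kubectl auth can-i list pods --as "$user" --namespace "$ns"
)
```

For example, `grant_pod_reader dev jane` lets the user jane list Pods in dev without being able to modify them.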

3. Use Namespaces

Segment environments (dev, staging, prod) to avoid resource conflicts.
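A short sketch for creating one namespace per environment; the function name is illustrative.

```shell
# Create a namespace for each environment name given.
create_env_namespaces() (
  set -eu
  for env in "$@"; do
    kubectl create namespace "$env"
  done
)
```

Calling `create_env_namespaces dev staging prod` creates the three environments named above; workloads are then deployed with `--namespace` set accordingly.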

4. Implement Network Policies

Define how pods communicate with each other to improve security.
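A common starting point is a default-deny ingress policy per namespace, with explicit allow rules added afterwards. The sketch below uses hypothetical function and policy names. Note that policies are only enforced when the cluster's network plugin supports them; Flannel on its own does not.

```shell
# Print a NetworkPolicy that blocks all ingress traffic to Pods
# in the given namespace.
default_deny_manifest() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: $1
spec:
  podSelector: {}
  policyTypes:
    - Ingress
EOF
}

# Apply the policy to a namespace.
apply_default_deny() (
  set -eu
  default_deny_manifest "$1" | kubectl apply -f -
)
```

The empty `podSelector` matches every Pod in the namespace, so `apply_default_deny prod` isolates all Pods in prod until allow rules are added.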

5. Automate with GitOps

Use tools like ArgoCD or Flux to automatically apply changes from Git repositories.
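As one example, Argo CD can be installed from its published stable manifest. This is a minimal sketch with an illustrative function name; it covers installation only, not the definition of applications to sync.

```shell
# Install Argo CD into its own namespace from the upstream stable manifest.
install_argocd() (
  set -eu
  kubectl create namespace argocd
  kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
)
```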

6. Backups and Disaster Recovery

Regularly back up etcd and have a documented recovery strategy.
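On a kubeadm-built control-plane node, an etcd snapshot could be taken as sketched below. This assumes etcdctl is installed on the node and that the certificates sit in kubeadm's default locations; the function name is illustrative.

```shell
# Save an etcd snapshot to the given file path.
backup_etcd() (
  set -eu
  sudo ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    snapshot save "$1"
)
```

For example, `backup_etcd /var/backups/etcd-snapshot.db` writes a snapshot to a hypothetical backup directory. Copy snapshots off the node, since a backup stored only on the control plane is lost along with it.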

At OpsNexa, we build custom Kubernetes automation scripts, Helm charts, and monitoring stacks to accelerate cluster reliability and reduce MTTR (mean time to recovery).

Final Thoughts: Create and Scale Kubernetes Clusters Confidently with OpsNexa

Whether you’re building your first Kubernetes cluster on a laptop or deploying hundreds of microservices across clouds, understanding the different creation methods is key. From local development with Minikube to enterprise-grade setups using kubeadm or managed cloud services, Kubernetes offers the flexibility to scale based on your needs.

At OpsNexa, we help businesses architect, deploy, and manage production-grade Kubernetes clusters. If you need help automating cluster creation, setting up CI/CD pipelines, or enhancing Kubernetes security—reach out to our DevOps experts today.

Contact OpsNexa for DevOps architect and DevOps hiring solutions.