What Are Kubernetes Clusters? A Simple Guide by OpsNexa

In the world of containerized applications, Kubernetes has become the go-to platform for automating deployment, scaling, and management. At the heart of Kubernetes is the Kubernetes cluster, but what exactly does this mean, and how does it benefit your business?

In this post, we’ll explain what a Kubernetes cluster is, its core components, and why it’s crucial for modern application management. We’ll also explore how OpsNexa can assist you in setting up, managing, and optimizing Kubernetes clusters for your needs.

What is a Kubernetes Cluster?

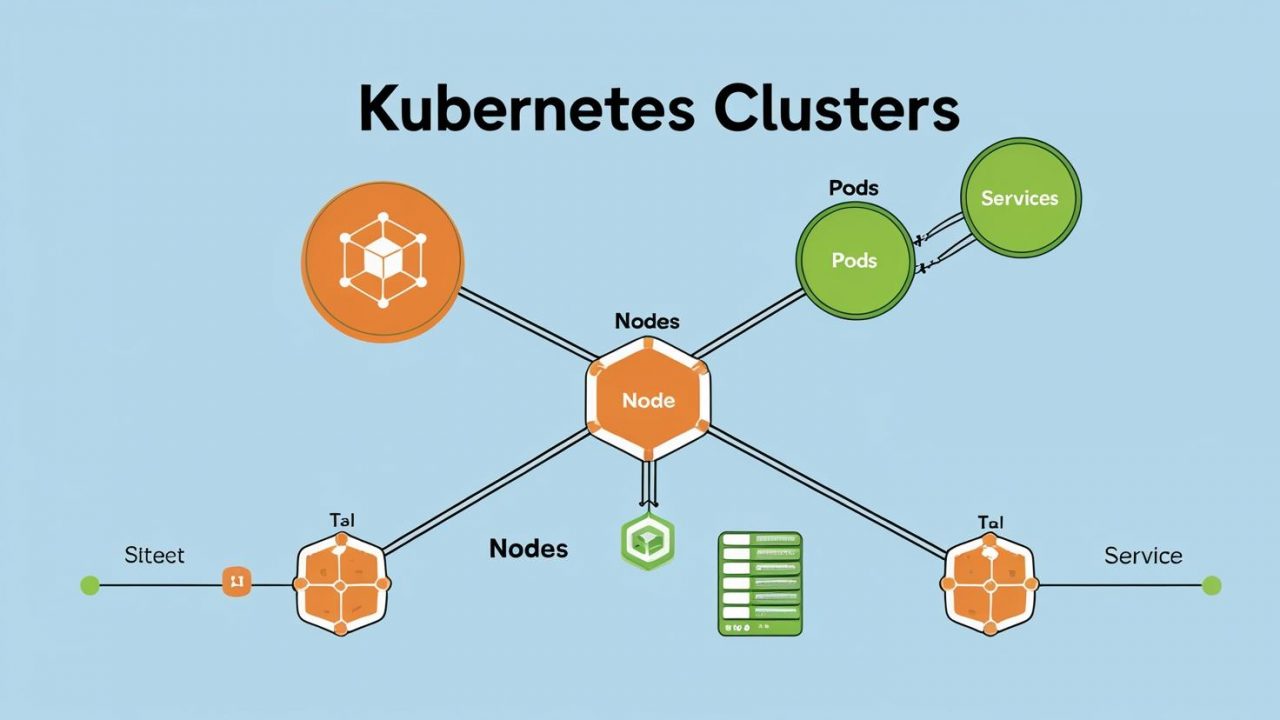

A Kubernetes cluster is a set of interconnected machines (either physical or virtual) that work together to run containerized applications. It forms the foundation for deploying, scaling, and managing these applications in a streamlined, automated way.

Kubernetes clusters are made up of two main parts: the control plane, which manages the cluster, and the worker nodes, where your applications run.

Key Components of a Kubernetes Cluster

- Control Plane: The control plane is the brain of the Kubernetes cluster. It tracks the cluster’s state, schedules work, and reacts to changes such as a failed pod. The control plane includes:
  - API Server: The API server acts as the gateway for all interactions with the cluster. It validates and processes requests, and every other component communicates through it.
  - Scheduler: The scheduler decides which node should run each pod (a group of one or more containers) based on available resources and constraints.
  - Controller Manager: This component runs the controllers that continuously compare the cluster’s actual state with the desired state (e.g., the number of pods running) and act to close any gap.
  - etcd: A distributed key-value store that holds all the cluster’s configuration data and state, serving as the cluster’s source of truth.
- Worker Nodes: These are the physical or virtual machines where your application workloads (pods) run. Worker nodes include:
  - Kubelet: An agent on each node that ensures the containers described in the pods assigned to that node are running and healthy.
  - Container Runtime: The software that runs containers within pods (e.g., containerd or CRI-O).
  - Kube Proxy: A network proxy on each node that maintains the network rules used to route Service traffic to the right pods.
- Pods: A pod is the smallest unit of deployment in Kubernetes. It can contain one or more containers that share the same network and storage resources. Pods are scheduled to run on worker nodes.
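
To make the scheduler’s role more concrete, here is a minimal Python sketch of the idea of filtering nodes and then scoring them. It is a toy model, not Kubernetes’ actual scheduler: the `Node`, `PodRequest`, and `pick_node` names, the resource figures, and the "most free CPU" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int    # free CPU in millicores (hypothetical figures)
    mem_free_mib: int  # free memory in MiB


@dataclass
class PodRequest:
    name: str
    cpu_m: int
    mem_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    """Toy scheduler: filter nodes that fit the pod, then score the survivors."""
    # Filter step: drop nodes that lack the requested CPU or memory.
    feasible = [
        n for n in nodes
        if n.cpu_free_m >= pod.cpu_m and n.mem_free_mib >= pod.mem_mib
    ]
    if not feasible:
        return None  # no node fits, so the pod would stay pending

    # Score step (assumed rule): prefer the node with the most CPU left over.
    best = max(feasible, key=lambda n: n.cpu_free_m - pod.cpu_m)

    # Bind step: reserve the requested resources on the chosen node.
    best.cpu_free_m -= pod.cpu_m
    best.mem_free_mib -= pod.mem_mib
    return best


nodes = [
    Node("node-a", cpu_free_m=500, mem_free_mib=1024),
    Node("node-b", cpu_free_m=2000, mem_free_mib=4096),
    Node("node-c", cpu_free_m=1500, mem_free_mib=512),
]
chosen = pick_node(PodRequest("web", cpu_m=1000, mem_mib=1024), nodes)
print(chosen.name if chosen else "unschedulable")  # prints "node-b"
```

Only `node-b` has both enough CPU and enough memory for the request, so it is selected. A real scheduler weighs many more factors, such as affinity rules and taints.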

Why Kubernetes Clusters Matter

Kubernetes clusters are essential for managing containerized applications effectively, especially at scale. Here’s why they’re so important:

1. Scalability

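Kubernetes clusters can scale applications automatically to meet demand. With autoscaling configured, the Horizontal Pod Autoscaler adds or removes pod replicas based on metrics such as CPU utilization, and the Cluster Autoscaler can add or remove nodes when pods no longer fit on the existing ones.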
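
Pod-level autoscaling of this kind is typically handled by the Horizontal Pod Autoscaler, whose documented rule is `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`. The Python sketch below works through that arithmetic. The function name, the replica bounds, and the sample metric values are assumptions for illustration, and the real autoscaler applies further rules (such as stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Apply the basic HPA scaling formula, clamped to the replica bounds."""
    ratio = current_metric / target_metric
    # If the metric is within the tolerance of the target, leave things alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 90% average CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))
# 62% against a 60% target is within tolerance, so the count stays at 4
print(desired_replicas(4, current_metric=62.0, target_metric=60.0))
# 15% against a 60% target: ceil(4 * 0.25) = 1
print(desired_replicas(4, current_metric=15.0, target_metric=60.0))
```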

2. High Availability

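If a pod fails, the controller that owns it (for example, a Deployment) creates a replacement. If a node fails, its pods are recreated on healthy nodes. Running several replicas across multiple nodes keeps the application available while replacements start up.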
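
This self-healing behavior rests on a reconciliation loop: a controller repeatedly compares the desired state with what is actually running and corrects any difference. The Python sketch below shows the idea in miniature. The `reconcile` function and the `web-N` pod names are hypothetical, and real controllers work against the API server rather than an in-memory list.

```python
import itertools

# Hypothetical source of unique pod name suffixes.
_ids = itertools.count(1)


def reconcile(desired: int, running: list[str]) -> list[str]:
    """Return the pod list after one reconciliation pass."""
    pods = list(running)
    # Too few pods: create replacements until the desired count is met.
    while len(pods) < desired:
        pods.append(f"web-{next(_ids)}")
    # Too many pods: remove the surplus.
    while len(pods) > desired:
        pods.pop()
    return pods


pods = reconcile(3, [])    # initial rollout creates web-1, web-2, web-3
pods.remove("web-2")       # simulate a pod failure
pods = reconcile(3, pods)  # the next pass creates a replacement
print(pods)                # ['web-1', 'web-3', 'web-4']
```

Note that the failed pod is not restarted under its old name; a new pod takes its place, which is why applications on Kubernetes should not depend on the identity of an individual pod.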

3. Resource Efficiency

Kubernetes clusters are designed to use resources efficiently. The scheduler places pods according to their declared CPU and memory requests, spreading workloads across nodes in a way that helps avoid both idle capacity and overloaded machines.

4. Portability

Kubernetes runs on-premises, in the cloud, and in hybrid environments, so the same application definitions can be deployed across all of them with few changes. This flexibility makes Kubernetes a good fit for multi-cloud strategies and for reducing vendor lock-in.

How Kubernetes Clusters Work

A Kubernetes cluster operates as a set of interconnected systems working together to manage containers. Here’s how it functions:

- User Requests: Users interact with the cluster through the Kubernetes API (using tools like kubectl). Requests to deploy, scale, or manage applications are sent to the API server.
- Workload Scheduling: The scheduler watches for newly created pods that have no node assigned and determines which worker node should run each one based on available resources and constraints.
- Running Applications: The kubelet on each worker node ensures that containers within pods are running as expected. It constantly communicates with the control plane to report the status of applications.
- Service Discovery: Kubernetes provides built-in service discovery. A Service gives a set of pods a stable name and IP address and load-balances traffic across them, so pods can find and talk to each other. Services can also expose applications outside the cluster, for example through a LoadBalancer Service or an Ingress.
- Scaling and Self-Healing: Kubernetes monitors the health of applications and nodes. If a pod fails, it is replaced automatically; if a node fails, its pods are rescheduled onto healthy nodes. The system can also scale workloads based on usage, adding or removing resources as needed.
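
The service discovery step above can be pictured with a small Python sketch in which a Service forwards requests only to pods that are ready. This is a simplified model under stated assumptions: the `ToyService` class and the pod IP addresses are hypothetical, and round-robin is used only for readability, since the actual selection strategy depends on how the cluster’s networking is configured.

```python
from itertools import cycle


class ToyService:
    """Toy model of a Service: a stable name in front of a changing set of pods."""

    def __init__(self, name: str, pods: dict[str, bool]):
        self.name = name
        self.pods = dict(pods)  # maps pod IP -> readiness flag

    def endpoints(self) -> list[str]:
        # Only ready pods are eligible to receive traffic.
        return sorted(ip for ip, ready in self.pods.items() if ready)

    def route(self, n_requests: int) -> list[str]:
        # Spread requests across the ready endpoints in turn.
        eps = self.endpoints()
        if not eps:
            return []  # no ready pods, so nothing can serve the request
        picker = cycle(eps)
        return [next(picker) for _ in range(n_requests)]


svc = ToyService("web", {"10.0.0.1": True, "10.0.0.2": False, "10.0.0.3": True})
print(svc.route(4))  # ['10.0.0.1', '10.0.0.3', '10.0.0.1', '10.0.0.3']
```

The pod at `10.0.0.2` is skipped until it becomes ready, which is how traffic is kept away from pods that are still starting or are failing their health checks.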

Types of Kubernetes Clusters

Kubernetes clusters can be deployed in different environments to suit your specific needs:

- On-Premises Clusters: Some businesses prefer to run Kubernetes on their own hardware within their data center. This provides complete control over the infrastructure but requires more effort to manage.
- Cloud-based Clusters: Cloud providers like Amazon Web Services (AWS), Google Cloud (GCP), and Microsoft Azure offer managed Kubernetes services (e.g., Amazon EKS, Google GKE, Azure AKS), simplifying cluster setup and management.
- Hybrid and Multi-cloud Clusters: Kubernetes clusters can span on-premises and cloud environments, providing a flexible and redundant infrastructure. This approach helps businesses avoid being locked into a single cloud provider.

When Should You Use Kubernetes Clusters?

Kubernetes clusters are ideal for specific use cases that require scalability, reliability, and automation. Here are a few situations where Kubernetes clusters make sense:

1. Microservices Architectures

When your application is divided into many smaller services (microservices), Kubernetes clusters help orchestrate them, ensuring they can communicate and scale independently.

2. High-Availability Applications

For mission-critical applications that need to remain available at all times, Kubernetes ensures automatic failover and redundancy, so your application keeps running even if components fail.

3. Cloud-Native Applications

If you’re building cloud-native applications designed to scale and take advantage of cloud environments, Kubernetes is well suited to managing these apps in a distributed, automated way.

4. Multi-Cloud Deployments

When your applications need to run across different cloud providers, Kubernetes clusters help you avoid vendor lock-in and ensure consistent operations across diverse environments.

How OpsNexa Can Help You With Kubernetes Clusters

At OpsNexa, we specialize in helping businesses leverage Kubernetes clusters for their containerized applications. Here’s how we can assist:

1. Kubernetes Cluster Setup

We guide you through the process of setting up Kubernetes clusters in any environment—on-premises, cloud, or hybrid. We ensure the setup is tailored to your needs, focusing on scalability, security, and availability.

2. Cluster Management and Monitoring

Our team takes care of ongoing management and monitoring of your Kubernetes clusters. We handle updates, security patches, and proactive health checks to keep everything running smoothly.

3. CI/CD Integration

We can integrate your Kubernetes clusters with Continuous Integration and Continuous Deployment (CI/CD) pipelines to streamline your software delivery process, automating everything from testing to production deployment.

4. Training and Support

Our experts provide training and support to ensure your team can effectively manage Kubernetes clusters. Whether you need help with basic setup or advanced troubleshooting, we’re here to help.

Conclusion

Kubernetes clusters are a vital part of modern containerized application management, providing automation, scalability, and high availability. They allow businesses to efficiently manage their infrastructure and applications, ensuring they can scale and adapt to growing demands.

At OpsNexa, we help organizations design, deploy, and manage Kubernetes clusters to ensure seamless application orchestration. Whether you need help setting up your first cluster or optimizing your existing one, we are here to assist.

Contact OpsNexa for DevOps architect and DevOps hiring solutions.