What is a Kubernetes Cluster? A Simple Guide for OpsNexa on Container Management

As businesses like OpsNexa adopt containerization and microservices, understanding Kubernetes becomes key to managing and scaling applications effectively. At the core of Kubernetes lies the Kubernetes Cluster: the set of machines that Kubernetes coordinates to run containerized applications.

In this guide, we’ll explain what a Kubernetes Cluster is, how it works, and why it’s crucial for OpsNexa to leverage this technology to streamline application deployment, ensure high availability, and support scaling needs.

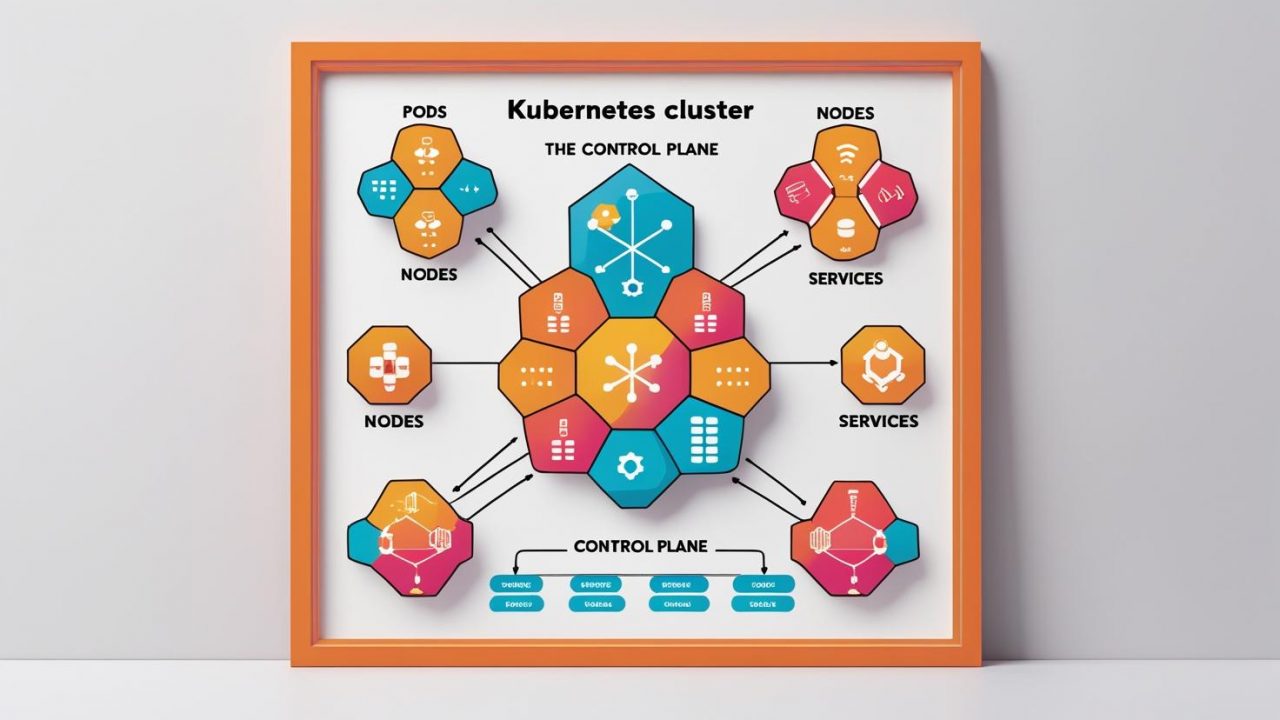

What is a Kubernetes Cluster?

A Kubernetes Cluster is a set of machines (physical or virtual), called nodes, that work together to run containerized applications. It’s the heart of the Kubernetes architecture and makes sure applications are deployed, managed, and scaled efficiently. A Kubernetes Cluster is made up of two main parts:

- Control Plane (Master Node)
- Worker Nodes

These components work together to ensure everything runs smoothly, from scheduling workloads to managing resources and maintaining the overall health of the cluster.

Let’s take a deeper look at each of these components.

Key Components of a Kubernetes Cluster

1. Control Plane (Master Node)

The Control Plane, or master node, is the brain of the Kubernetes Cluster. It manages the cluster’s overall state and ensures that applications are running as expected. The Control Plane includes several critical components:

- Kubernetes API Server: The interface through which users, developers, and internal components interact with the cluster. All operations in the cluster, such as deploying applications, go through the API Server.
- Controller Manager: Runs the built-in controllers, which are control loops that continuously compare the desired state (for example, three running replicas of an application) with the actual state and act to close any gap.
- Scheduler: Decides which worker node runs each new Pod, based on the Pod’s resource requests, each node’s available capacity, and constraints such as node affinity and taints.
- etcd (Cluster Data Store): A consistent, highly available key-value store that holds the cluster’s configuration and state data.

For OpsNexa, the Control Plane is responsible for making sure everything in the cluster is running smoothly and that tasks like application deployment and scaling happen automatically.
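The Controller Manager’s core idea, repeatedly comparing desired state with actual state and acting on the difference, can be illustrated with a toy reconciliation loop. This is a simplified sketch, not real controller code: `ToyReplicaSet` and its methods are hypothetical names, and a real controller watches the API Server instead of mutating a local list.

```python
from dataclasses import dataclass, field


@dataclass
class ToyReplicaSet:
    """Hypothetical stand-in for a ReplicaSet: a desired Pod count plus the Pods observed running."""
    name: str
    desired_replicas: int
    running_pods: list = field(default_factory=list)
    next_id: int = 0

    def reconcile(self) -> list:
        """Run one pass of the control loop and return the actions taken."""
        actions = []
        # Too few Pods: create new ones until actual matches desired.
        while len(self.running_pods) < self.desired_replicas:
            pod = f"{self.name}-{self.next_id}"
            self.next_id += 1
            self.running_pods.append(pod)
            actions.append(f"create {pod}")
        # Too many Pods: delete the most recently created ones first.
        while len(self.running_pods) > self.desired_replicas:
            actions.append(f"delete {self.running_pods.pop()}")
        return actions


rs = ToyReplicaSet("web", desired_replicas=3)
print(rs.reconcile())            # ['create web-0', 'create web-1', 'create web-2']
rs.running_pods.remove("web-1")  # simulate a Pod failing
print(rs.reconcile())            # ['create web-3']
```

The loop never needs to know *why* the actual state drifted; it only reacts to the difference, which is what lets Kubernetes recover from failures and scaling changes with the same mechanism.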

2. Worker Nodes (Node Pool)

The worker nodes are the machines that actually run your applications in the Kubernetes Cluster. Each node contains the components necessary to run containers and manage their lifecycle, including:

- Kubelet: The agent running on each worker node. It makes sure that the containers described in the Pods assigned to that node are running and healthy.
- Kube Proxy: Maintains network rules on the node that implement Services, so traffic sent to a Service is forwarded to one of the Pods behind it.
- Container Runtime: The software that actually runs the containers. Common runtimes include containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter, because built-in Docker support (dockershim) was removed in Kubernetes 1.24.

For OpsNexa, the worker nodes are where your containerized applications actually live and run. When you scale your application, Kubernetes will use these nodes to deploy new instances.
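One concrete way the kubelet keeps containers running is by restarting crashed containers with an exponential back-off. The sketch below reproduces the delay schedule described in the Kubernetes Pod lifecycle documentation (10s, 20s, 40s, and so on, capped at five minutes); the function name is hypothetical, and these defaults may differ between Kubernetes versions.

```python
def restart_delay_seconds(restart_count: int, initial: int = 10, cap: int = 300) -> int:
    """Back-off before the next restart, given how many restarts already happened.

    Hypothetical helper modeled on the documented CrashLoopBackOff schedule:
    the delay doubles after each restart and is capped at `cap` seconds.
    """
    return min(initial * 2 ** restart_count, cap)


schedule = [restart_delay_seconds(n) for n in range(7)]
print(schedule)  # [10, 20, 40, 80, 160, 300, 300]
```

The documentation also notes that the kubelet resets this timer once a container has run for 10 minutes without problems; the sketch omits that reset.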

3. Pods

In Kubernetes, a Pod is the smallest deployable unit and can run one or more containers. Pods are the building blocks of Kubernetes workloads. They are scheduled onto worker nodes, and the containers inside a Pod share a network namespace (one IP address and port space) and can share storage volumes.

For OpsNexa, Pods are where you’ll deploy your applications. Each Pod can run multiple containers that are tightly coupled (such as a web server and its sidecar logging container).
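A Pod with a web server and a sidecar logging container might be described as follows. Kubernetes manifests are usually written in YAML, but kubectl also accepts JSON, so this sketch builds the manifest as a Python dictionary and prints it as JSON. The Pod name, images, and paths are illustrative assumptions; the `emptyDir` volume is what lets the two containers share log files.

```python
import json

# Illustrative Pod: names, images, and paths are assumptions, not requirements.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-logger", "labels": {"app": "web"}},
    "spec": {
        # Scratch volume that lives as long as the Pod and is mounted by both containers.
        "volumes": [{"name": "logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                # Sidecar: follows the web server's access log from the shared volume.
                "name": "log-shipper",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Saving the output as `pod.json` and running `kubectl apply -f pod.json` would create the Pod; both containers are always scheduled together onto the same node.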

4. Services

A Service is an abstraction layer that provides a stable endpoint for accessing your Pods. It helps with load balancing and ensures that your application can be accessed even as Pods are added or removed. Services make it easier for clients to find and connect to the right Pods.

For OpsNexa, Kubernetes Services provide consistent access to your applications, even as Pods are scaled up or down.
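A Service finds its Pods through a label selector: any Pod whose labels include every key/value pair in the selector becomes a candidate endpoint. The sketch below shows a minimal Service manifest and a toy version of that matching. The Service name, ports, and Pod labels are assumptions, and a real cluster also excludes Pods that are not Ready, which this sketch ignores.

```python
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},
        # Clients connect to port 80 on the Service; traffic goes to port 80 in the Pods.
        "ports": [{"port": 80, "targetPort": 80}],
    },
}


def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based matching: every selector key must be present with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(svc: dict, pods: dict) -> list:
    """Names of the Pods the Service would route to (readiness is ignored here)."""
    selector = svc["spec"].get("selector")
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return sorted(name for name, labels in pods.items() if selector_matches(selector, labels))


pods = {
    "web-0": {"app": "web", "tier": "frontend"},
    "web-1": {"app": "web"},
    "db-0": {"app": "db"},
}
print(endpoints(service, pods))  # ['web-0', 'web-1']
```

When more Pods labeled `app: web` appear as the application scales up, the same selector picks them up without any change to the Service, which is why clients keep a stable endpoint.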

5. Namespaces

Namespaces help organize your resources within the Kubernetes Cluster. They allow you to divide the cluster into different logical units, so multiple teams or applications can share the same cluster without interfering with each other’s resources. Each namespace can have its own set of resources.

For OpsNexa, namespaces are useful for managing different environments, such as development, staging, and production, within the same Kubernetes Cluster.
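Creating one namespace per environment takes only a small amount of configuration. This sketch generates Namespace manifests for the development, staging, and production environments and checks each name against the RFC 1123 label rules that Kubernetes applies to namespace names (lowercase alphanumerics and `-`, starting and ending with an alphanumeric character, at most 63 characters). The helper names are hypothetical.

```python
import json
import re

# RFC 1123 label: lowercase alphanumerics or '-', starting and ending with an alphanumeric.
DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_namespace_name(name: str) -> bool:
    return len(name) <= 63 and DNS_LABEL.fullmatch(name) is not None


def namespace_manifest(name: str) -> dict:
    if not is_valid_namespace_name(name):
        raise ValueError(f"invalid namespace name: {name!r}")
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


environments = ["development", "staging", "production"]
# A v1 List bundles several objects into one file that kubectl can apply.
manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [namespace_manifest(env) for env in environments],
}
print(json.dumps(manifests, indent=2))
```

Applying the output with `kubectl apply -f namespaces.json` would create all three namespaces, after which commands can target one environment with `--namespace staging` (or `-n staging`).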

Why Should OpsNexa Use a Kubernetes Cluster?

A Kubernetes Cluster is essential for managing large-scale containerized applications. Here are some key reasons why OpsNexa should consider using Kubernetes to manage its containerized applications:

1. High Availability and Fault Tolerance

Kubernetes helps keep your applications available even if some nodes or Pods fail. If a container crashes, the kubelet restarts it; if a node fails, controllers such as Deployments create replacement Pods, which the scheduler places on healthy nodes. This built-in self-healing is crucial for maintaining application uptime.

For OpsNexa, this means that applications running multiple replicas can keep serving users through hardware or software failures with little or no interruption.

2. Scalability

Kubernetes makes it easy to scale applications up or down based on demand. With the HorizontalPodAutoscaler, it can automatically adjust the number of Pod replicas based on metrics such as CPU utilization, memory utilization, or custom metrics like request rate.

For OpsNexa, Kubernetes can handle changes in demand without requiring manual intervention, ensuring that your applications always have the resources they need.
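The HorizontalPodAutoscaler’s core calculation is documented as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The function below sketches just that arithmetic plus clamping to a minimum and maximum replica count; the real controller also applies stabilization windows and handles missing metrics, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling formula (no stabilization or missing-metric handling)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: skip scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 Pods averaging 80% CPU against a 50% target: ceil(4 * 1.6) = 7 Pods.
print(desired_replicas(4, current_metric=80, target_metric=50))
```

Note that adding Pods only helps if the cluster has room for them; node-level scaling is a separate concern, typically handled by a cluster autoscaler.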

3. Resource Management and Optimization

Kubernetes lets you declare resource requests and limits for CPU and memory on each container. The scheduler places each Pod only on a node with enough unreserved capacity to satisfy its requests, which helps balance resource usage across the cluster.

For OpsNexa, this means more efficient use of infrastructure, as Kubernetes ensures that resources are used where they’re most needed.
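The placement step can be pictured as filter-then-score. This toy scheduler first filters out nodes that cannot fit the Pod’s CPU and memory requests, then prefers the node that would be least allocated afterwards, roughly in the spirit of the default scheduler’s resource-fit scoring. It is a simplification: the node data is hypothetical, and the real kube-scheduler runs many more filter and score plugins (affinity, taints, topology spread, and others).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int    # allocatable CPU in millicores
    cpu_requested_m: int   # CPU already requested by Pods on this node
    mem_capacity_mi: int   # allocatable memory in MiB
    mem_requested_mi: int  # memory already requested by Pods on this node


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """Filter: the node must have enough unrequested capacity for the Pod's requests."""
    return (node.cpu_requested_m + cpu_m <= node.cpu_capacity_m
            and node.mem_requested_mi + mem_mi <= node.mem_capacity_mi)


def free_fraction_after(node: Node, cpu_m: int, mem_mi: int) -> float:
    """Score: average fraction of CPU and memory left free once the Pod is placed."""
    cpu_free = (node.cpu_capacity_m - node.cpu_requested_m - cpu_m) / node.cpu_capacity_m
    mem_free = (node.mem_capacity_mi - node.mem_requested_mi - mem_mi) / node.mem_capacity_mi
    return (cpu_free + mem_free) / 2


def schedule(cpu_m: int, mem_mi: int, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu_m, mem_mi)]
    if not feasible:
        return None  # a real Pod would stay Pending until capacity frees up
    # max() keeps the first node in the list on ties.
    return max(feasible, key=lambda n: free_fraction_after(n, cpu_m, mem_mi)).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 1024),  # almost out of CPU
    Node("node-b", 4000, 1000, 8192, 6144),  # CPU to spare, little memory left
    Node("node-c", 2000, 0, 4096, 0),        # small but empty
]
print(schedule(1000, 1024, nodes))  # node-c
```

Scoring by free capacity spreads Pods across nodes; packing them tightly instead is also possible, and which is better depends on whether you are optimizing for headroom or for cost.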

4. Simplified Deployment and Management

With Kubernetes, deploying and updating applications becomes much easier. Kubernetes can automate tasks like rolling updates, application rollbacks, and scaling. This reduces the need for manual intervention, making the deployment process more efficient and reliable.

For OpsNexa, this means faster, safer deployments and less downtime during updates.
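During a Deployment’s rolling update, two settings bound how far the Pod count can move from the desired replica count: `maxSurge` (extra Pods allowed above it) and `maxUnavailable` (Pods allowed to be unavailable below it). Both default to 25%, and when given as percentages, `maxSurge` rounds up while `maxUnavailable` rounds down. The helper below is a hypothetical sketch of that arithmetic only, not of the rollout itself.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple:
    """Return (max total Pods, min available Pods) during a rolling update.

    Integer arithmetic avoids float rounding: -(-a // b) is ceil(a / b).
    """
    surge = -(-replicas * max_surge_pct // 100)          # rounds up
    unavailable = replicas * max_unavailable_pct // 100  # rounds down
    return replicas + surge, replicas - unavailable


# With 10 replicas and the 25% defaults: at most 13 Pods, at least 8 available.
print(rolling_update_bounds(10))
```

These bounds are why a rolling update can replace Pods a few at a time while most replicas keep serving traffic.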

5. Service Discovery and Load Balancing

Kubernetes has built-in service discovery (through cluster DNS) and load balancing (through Services), which spread traffic across the healthy Pods behind each Service. This is especially useful when scaling applications to handle high traffic volumes.

For OpsNexa, Kubernetes ensures that your applications can handle increased traffic and that users can always access them without disruption.

6. Security and Isolation

Kubernetes provides role-based access control (RBAC), which lets you control who can perform which actions on which resources within the cluster. Namespaces add a further boundary: RBAC roles and resource quotas can be scoped to a namespace, although network traffic between namespaces is not restricted unless you add NetworkPolicies (enforced by a supporting network plugin).

For OpsNexa, this means that sensitive resources can be protected, and different teams can work independently within their own namespaces with less risk of interfering with one another.
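RBAC rules are additive lists of allowed verbs on resources. The Role below mirrors the `pod-reader` example from the Kubernetes RBAC documentation, placed in a `development` namespace as an assumption, and the `allows` function is a toy approximation of how such a Role is evaluated: it ignores RoleBindings, `resourceNames`, subresources, and aggregated roles.

```python
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "development"},
    "rules": [
        # "" is the core API group, which contains Pods.
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "watch", "list"]},
    ],
}


def _matches(value: str, allowed: list) -> bool:
    return value in allowed or "*" in allowed


def allows(role: dict, namespace: str, api_group: str, resource: str, verb: str) -> bool:
    """Toy check: a Role applies only in its own namespace, and any matching rule grants access."""
    if namespace != role["metadata"]["namespace"]:
        return False
    return any(
        _matches(api_group, rule["apiGroups"])
        and _matches(resource, rule["resources"])
        and _matches(verb, rule["verbs"])
        for rule in role["rules"]
    )


print(allows(role, "development", "", "pods", "list"))    # True
print(allows(role, "development", "", "pods", "delete"))  # False
print(allows(role, "production", "", "pods", "list"))     # False
```

Because rules only ever grant access, restricting a team means giving it a narrower Role rather than adding "deny" rules.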

How to Set Up a Kubernetes Cluster for OpsNexa

Setting up a Kubernetes Cluster can be complex, but there are several tools and platforms available to make the process easier:

- Managed Kubernetes Services: Platforms like Amazon EKS, Google GKE, and Azure AKS offer managed Kubernetes clusters. They run and upgrade the control plane for you and provide tools for upgrading and scaling worker nodes, making setup and management easier.
- Self-Managed Clusters: If you prefer to manage the cluster yourself, tools like kubeadm or Rancher can help you set up and manage a Kubernetes Cluster on your own infrastructure.
- Minikube: For local development, Minikube runs a Kubernetes Cluster (a single node by default) on your local machine, which is useful for testing and development.

Once the cluster is set up, you can use kubectl (the Kubernetes command-line tool) to deploy applications and manage your cluster.
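As a first deployment on a new cluster, a Deployment manifest like the one generated below could be applied with kubectl. The app name, image, and replica count are placeholders, and the script is a hypothetical helper, not an OpsNexa tool. The Deployment’s `selector.matchLabels` must match the labels on its Pod template, which the helper guarantees by building both from the same values.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal apps/v1 Deployment whose selector matches its Pod template labels."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Redirecting the output to `deployment.json` and running `kubectl apply -f deployment.json` would create the Deployment; `kubectl get pods -l app=web` then lists the Pods it manages, and `kubectl rollout status deployment/web` reports rollout progress.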

Conclusion: Kubernetes Clusters for Efficient Application Management

A Kubernetes Cluster is essential for managing and scaling containerized applications in today’s cloud-native world. For OpsNexa, leveraging a Kubernetes Cluster can improve application availability, scalability, and resource efficiency. With Kubernetes, you can automate deployments, ensure high availability, and easily scale your applications as needed.

By understanding the components of a Kubernetes Cluster and how to set it up, OpsNexa can streamline its infrastructure, reduce manual overhead, and take full advantage of modern container orchestration techniques. Whether you’re running a simple application or a complex microservices architecture, Kubernetes provides the tools you need for efficient management and scaling.

You can also contact OpsNexa for DevOps architect and DevOps hiring solutions.