How to Use Kubernetes: A Step-by-Step Guide for OpsNexa

In today’s world of containerized applications and microservices, Kubernetes has become the de facto standard for managing and orchestrating containers. Whether you’re running applications on cloud environments, on-premises infrastructure, or in hybrid cloud setups, Kubernetes provides an efficient way to deploy, scale, and manage your applications seamlessly.

For businesses like OpsNexa, understanding how to use Kubernetes effectively can significantly improve deployment speed, application reliability, and scalability. In this guide, we will walk you through how to use Kubernetes for managing your containerized applications, from installation to management and scaling.

What is Kubernetes?

Before diving into how to use Kubernetes, let’s briefly understand what Kubernetes is. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally developed by Google, Kubernetes is now maintained by the Cloud Native Computing Foundation (CNCF) and has become an industry standard for container orchestration.

With Kubernetes, you can manage containers at scale, monitor their health, and automate various aspects of the container lifecycle, such as scaling, rolling updates, and self-healing.
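Self-healing comes from controllers that repeatedly compare the desired state with the observed state and act on the difference. The Python sketch below is a deliberately simplified toy model of one reconciliation step for a replica count. The `reconcile` function and the `Actions` type are hypothetical illustrations, not Kubernetes source code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Actions:
    """What a controller would do to move the actual state toward the desired state."""
    to_create: int = 0
    to_delete: list[str] = field(default_factory=list)


def reconcile(desired_replicas: int, running_pods: list[str]) -> Actions:
    """One toy reconciliation step for a replica count (not real Kubernetes code)."""
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        # Too few pods, e.g. one crashed or its node failed: create replacements.
        return Actions(to_create=missing)
    # Too many pods, e.g. after scaling down: delete the surplus.
    # When the counts already match, this slice is empty and nothing happens.
    return Actions(to_delete=running_pods[desired_replicas:])


if __name__ == "__main__":
    # Desired: 3 replicas, but only 2 pods are still running.
    print(reconcile(3, ["web-a", "web-b"]))  # Actions(to_create=1, to_delete=[])
```

Real controllers also watch the API server for changes and manage many more fields. The underlying loop is still compare, then act.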

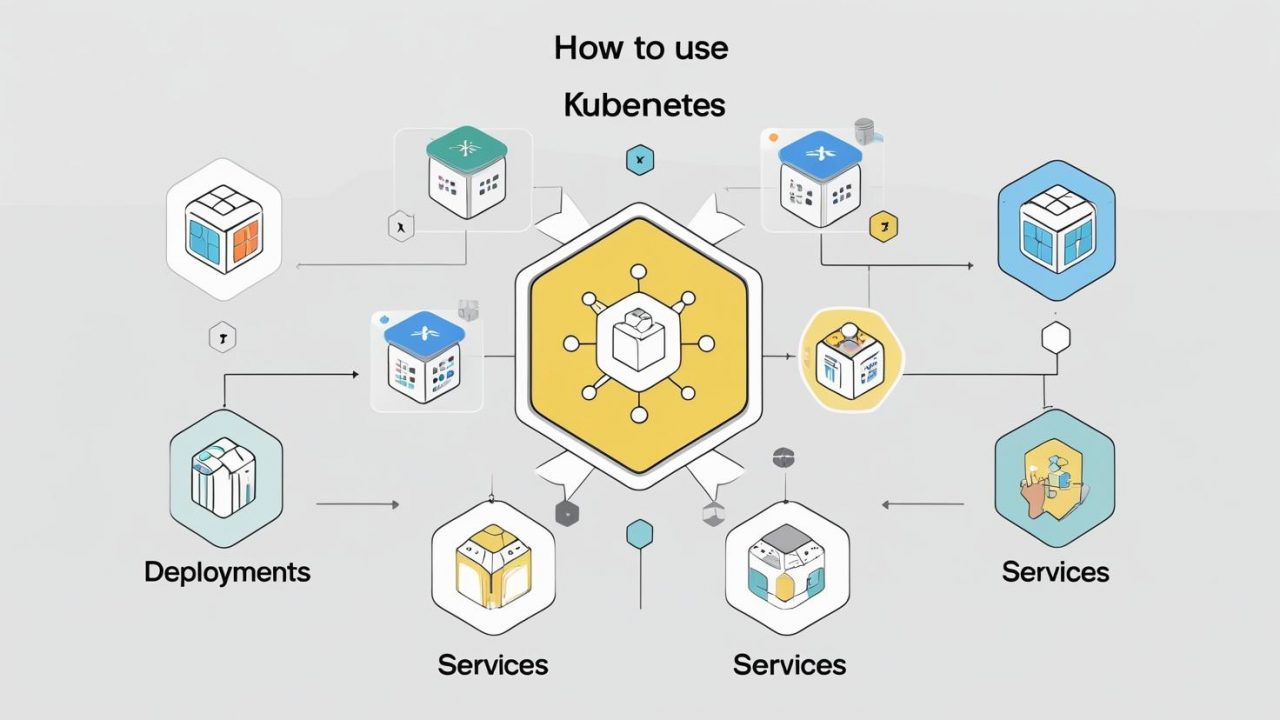

Key Concepts of Kubernetes

To use Kubernetes effectively, you need to understand some core concepts and components:

- Cluster: A Kubernetes cluster consists of a control plane (older documentation calls it the master node or nodes) and one or more worker nodes. The control plane manages the cluster, while the worker nodes run the containerized applications.

- Pod: A pod is the smallest deployable unit in Kubernetes. It typically represents a single instance of a running application. A pod can hold one or more containers that share the same network namespace (IP address and ports) and can share storage volumes.

- Deployment: A Deployment declares how a containerized application should run. It maintains the desired state for your application, keeping the specified number of replicas running and rolling out updates gradually.

- Service: A Service is an abstraction that groups a logical set of pods, usually selected by labels, and provides network access to them. Services give that set a stable IP address and DNS name, so other components of your application can reach it even as individual pods are replaced.

- Namespace: Namespaces divide a single Kubernetes cluster into multiple virtual clusters. This is useful when you need to isolate resources for different environments (e.g., development, testing, production).

- ConfigMap and Secret: These objects store configuration data and sensitive information (such as passwords and tokens), respectively, separately from your application code.
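The Python sketch below shows how a ConfigMap differs from a Secret. It builds both objects as plain dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The object names, the development namespace, and the values are hypothetical. A Secret's `data` field must contain base64-encoded values. Base64 is an encoding, not encryption.

```python
from __future__ import annotations

import base64
import json


def make_configmap(name: str, namespace: str, data: dict[str, str]) -> dict:
    """Build a ConfigMap manifest; values are stored as plain strings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": data,
    }


def make_secret(name: str, namespace: str, secrets: dict[str, str]) -> dict:
    """Build an Opaque Secret; the `data` field requires base64-encoded values."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in secrets.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": encoded,
    }


if __name__ == "__main__":
    # Hypothetical names and values, for illustration only.
    items = [
        make_configmap("app-config", "development", {"LOG_LEVEL": "debug"}),
        make_secret("app-secret", "development", {"DB_PASSWORD": "change-me"}),
    ]
    # A v1 List lets one file carry several objects for `kubectl apply -f`.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

To use it, save the output to a file such as app.json and run `kubectl apply -f app.json`. The development namespace must already exist.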

How to Use Kubernetes: A Practical Guide

Now that we’ve introduced some key concepts, let’s walk through how to use Kubernetes effectively for managing your applications.

1. Setting Up Kubernetes

Before you start using Kubernetes, you need a cluster. There are several ways to set one up:

- Minikube: A lightweight tool that runs a local Kubernetes cluster (single-node by default) on your machine. It’s well suited to development and testing.

- Cloud-managed services: Platforms like AWS EKS, Google GKE, and Azure AKS provide managed Kubernetes services. The cloud provider operates the control plane and handles much of the cluster management for you.

- kubeadm: If you’re setting up a cluster on your own infrastructure, you can use kubeadm. This Kubernetes project tool bootstraps a cluster by initializing the control plane and joining worker nodes. You remain responsible for operating the cluster afterward.

If you’re already running a Kubernetes cluster (e.g., through OpsNexa’s infrastructure or on the cloud), you can skip the installation steps and go straight into using Kubernetes.

2. Interacting with Kubernetes Using kubectl

Once your cluster is set up, you can interact with Kubernetes using the kubectl command-line tool. kubectl allows you to communicate with the Kubernetes API server to deploy and manage your applications.

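Here are some essential kubectl commands. Replace the placeholders in angle brackets with your own names:

- Check the cluster status: `kubectl cluster-info`

- Get all resources in the cluster: `kubectl get all --all-namespaces`. This lists common resource types such as pods, services, and deployments, but not every resource type.

- Get details about nodes: `kubectl get nodes`, or `kubectl describe node <node-name>` for a single node

- Create a new resource (e.g., a deployment) from a manifest file: `kubectl create -f <file>.yaml`

- Apply changes to a resource: `kubectl apply -f <file>.yaml`

- Delete a resource: `kubectl delete -f <file>.yaml`

- Get logs for a specific pod: `kubectl logs <pod-name>`

- Access a running pod: `kubectl exec -it <pod-name> -- /bin/sh`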
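Most kubectl read commands accept `-o json`, so their output is easy to process in scripts. The Python sketch below runs `kubectl get nodes -o json` against your current kubeconfig context and reports whether each node's Ready condition is True. The helper names are hypothetical. The script assumes kubectl is installed and on your PATH.

```python
from __future__ import annotations

import json
import subprocess


def node_readiness(nodes_list: dict) -> dict[str, bool]:
    """Map each node name to True if its "Ready" condition has status "True".

    `nodes_list` is the parsed output of `kubectl get nodes -o json`.
    """
    readiness = {}
    for node in nodes_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        readiness[node["metadata"]["name"]] = any(
            c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
        )
    return readiness


def fetch_nodes(timeout: float = 30.0) -> dict:
    """Run kubectl against the current kubeconfig context and parse its JSON output."""
    completed = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        capture_output=True, text=True, check=True, timeout=timeout,
    )
    return json.loads(completed.stdout)


if __name__ == "__main__":
    try:
        nodes = fetch_nodes()
    except (FileNotFoundError, subprocess.CalledProcessError,
            subprocess.TimeoutExpired) as err:
        print(f"could not query the cluster: {err}")
    else:
        for name, ready in node_readiness(nodes).items():
            print(f"{name}: {'Ready' if ready else 'NotReady'}")
```

Keeping the parsing separate from the kubectl call means you can test `node_readiness` with saved JSON and no cluster.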

3. Deploying Applications on Kubernetes

The most common task in Kubernetes is deploying applications. Here’s how to deploy a containerized application:

- Define your deployment: Create a deployment.yaml file that describes the desired state of your application. For example, a simple Nginx deployment file can tell Kubernetes to run two replicas of an Nginx container that listens on port 80.

- Apply the deployment: Run `kubectl apply -f deployment.yaml`. Kubernetes then creates the Nginx pods and keeps two replicas running at all times, replacing any pod that fails.

- Verify the deployment: Run `kubectl get deployments` and `kubectl get pods`. You should see two Nginx pods running in the cluster.

- Expose the deployment via a Service: To make the application accessible, create a Service, for example with `kubectl expose deployment <deployment-name> --type=LoadBalancer --port=80 --target-port=80`. This creates a LoadBalancer Service that maps the external port to the port your Nginx container listens on.

- Check the service status: Run `kubectl get services`. On a cloud provider, the EXTERNAL-IP column shows an IP address or DNS name for the application once the load balancer has been provisioned. On a local cluster such as Minikube, it may remain `<pending>` unless you run `minikube tunnel`.
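The Deployment and Service from these steps can also be written as manifests. The sketch below builds them in Python and prints a JSON List that `kubectl apply -f` accepts. The names nginx-deployment and nginx-service, the app: nginx label, and the nginx:1.25 image tag are assumptions for illustration. The important detail is that the Deployment's selector, its pod template labels, and the Service's selector all use the same label.

```python
from __future__ import annotations

import json

LABELS = {"app": "nginx"}  # hypothetical label shared by all three selectors


def nginx_deployment(name: str = "nginx-deployment", replicas: int = 2) -> dict:
    """Deployment running `replicas` copies of nginx on container port 80."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(LABELS)},
            "template": {
                "metadata": {"labels": dict(LABELS)},
                "spec": {
                    "containers": [{
                        "name": "nginx",
                        "image": "nginx:1.25",  # example tag; pin the version you use
                        "ports": [{"containerPort": 80}],
                    }],
                },
            },
        },
    }


def nginx_service(name: str = "nginx-service") -> dict:
    """LoadBalancer Service forwarding external port 80 to the pods' port 80."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": dict(LABELS),
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": [nginx_deployment(), nginx_service()]}
    print(json.dumps(manifest, indent=2))
```

For example, run the script and redirect its output to nginx.json. Then apply the file with `kubectl apply -f nginx.json`.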

4. Scaling Applications

One of the most powerful features of Kubernetes is its ability to scale applications easily. If your application needs to handle more traffic, you can increase the number of replicas running.

To scale a deployment, run `kubectl scale deployment <deployment-name> --replicas=5`.

This scales your Nginx deployment to five replicas. Kubernetes automatically creates the new pods needed to meet the desired state.

To verify the scaling, run `kubectl get pods`.

You should see five Nginx pods running.
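`kubectl rollout status deployment/<deployment-name>` is the built-in way to wait for a scaling operation or rollout to finish. To run a similar check in a script, you can compare the status fields from `kubectl get deployment <deployment-name> -o json` with the desired replica count. The function name is hypothetical, and the check is a simplified version of what kubectl does.

```python
def is_fully_rolled_out(deployment: dict) -> bool:
    """Return True when the Deployment's status matches its desired replica count.

    `deployment` is the parsed JSON from `kubectl get deployment <name> -o json`.
    Simplified check; `kubectl rollout status` is the authoritative tool.
    """
    desired = deployment.get("spec", {}).get("replicas", 1)  # API default is 1
    status = deployment.get("status", {})
    generation = deployment.get("metadata", {}).get("generation", 0)
    # If the controller has not yet observed the latest spec, the status is stale.
    if status.get("observedGeneration", 0) < generation:
        return False
    # Zero-valued counters are omitted from the JSON, hence the defaults of 0.
    counts = (
        status.get("replicas", 0),
        status.get("updatedReplicas", 0),
        status.get("readyReplicas", 0),
    )
    return all(count == desired for count in counts)
```

For example, pass it `json.loads(output)`, where `output` is the text kubectl printed. Poll until it returns True.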

5. Updating Applications

Kubernetes supports rolling updates, which let you update your application with little or no downtime. When you update a Deployment, Kubernetes replaces old pods with new ones gradually, a few at a time, rather than all at once.

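To update the container image in a deployment, run `kubectl set image deployment/<deployment-name> <container-name>=<new-image>`.

Kubernetes then replaces the pods incrementally, and Services route traffic only to pods that are ready. The application therefore stays available as long as the new pods start successfully. You can follow the progress with `kubectl rollout status deployment/<deployment-name>` and revert to the previous version with `kubectl rollout undo deployment/<deployment-name>`.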
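The Deployment's RollingUpdate settings, maxSurge and maxUnavailable, control how many pods are replaced at a time. Both default to 25%. According to the Kubernetes documentation, a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down. The sketch below applies that arithmetic to show the pod-count bounds during an update. It models only the arithmetic, not the controller, and the function name is hypothetical.

```python
from __future__ import annotations


def rolling_update_bounds(replicas: int, max_surge_pct: int = 25,
                          max_unavailable_pct: int = 25) -> tuple[int, int]:
    """Return (max total pods, min available pods) during a RollingUpdate.

    Percentages are converted as the Kubernetes docs describe:
    maxSurge rounds up, maxUnavailable rounds down.
    """
    surge = -(-replicas * max_surge_pct // 100)          # integer ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # integer floor division
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(5))  # (7, 4)
```

Take the five-replica Nginx deployment from the scaling step as an example. During the update, at most seven pods exist at once and at least four are available.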

6. Monitoring and Troubleshooting

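Kubernetes also offers basic built-in troubleshooting tools, accessed through kubectl:

- Get the logs of a pod: `kubectl logs <pod-name>`. Add `--previous` to see logs from a container that crashed and restarted.

- Access a pod for debugging: `kubectl exec -it <pod-name> -- /bin/sh`

- View the status of all resources: `kubectl get all`. Follow up with `kubectl describe pod <pod-name>` to see details and recent events for a specific pod.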

These tools allow you to quickly identify and resolve issues in your Kubernetes environment.
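You can also script these checks. The Python sketch below reads `kubectl get pods -o json` for the current namespace. It lists pods that are not in the Running or Succeeded phase, or that have a container stuck in a waiting state such as CrashLoopBackOff or ImagePullBackOff. Those pods are good candidates for `kubectl describe` and `kubectl logs`. The function name is hypothetical.

```python
from __future__ import annotations

import json
import subprocess


def unhealthy_pods(pods_list: dict) -> dict[str, list[str]]:
    """Map pod name -> reasons it is not (yet) healthy.

    `pods_list` is the parsed output of `kubectl get pods -o json`.
    """
    findings: dict[str, list[str]] = {}
    for pod in pods_list.get("items", []):
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        reasons = []
        if phase not in ("Running", "Succeeded"):
            reasons.append(f"phase={phase}")
        for cs in status.get("containerStatuses", []):
            waiting = cs.get("state", {}).get("waiting")
            if waiting:
                # e.g. CrashLoopBackOff (pod phase can still be Running) or ImagePullBackOff
                reasons.append(f"{cs['name']} waiting: {waiting.get('reason', 'unknown')}")
        if reasons:
            findings[pod["metadata"]["name"]] = reasons
    return findings


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["kubectl", "get", "pods", "-o", "json"],
            capture_output=True, text=True, check=True, timeout=30,
        ).stdout
    except (FileNotFoundError, subprocess.CalledProcessError,
            subprocess.TimeoutExpired) as err:
        print(f"could not query the cluster: {err}")
    else:
        for name, reasons in unhealthy_pods(json.loads(out)).items():
            print(f"{name}: {'; '.join(reasons)}")
```
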

Best Practices for Using Kubernetes at OpsNexa

- Use Helm: Helm is a package manager for Kubernetes that simplifies the process of deploying complex applications. You can use Helm to install, upgrade, and manage Kubernetes applications with ease.

- Leverage Namespaces: Use Kubernetes namespaces to isolate environments and manage resources better, especially if your organization handles multiple applications or microservices.

- Implement RBAC (Role-Based Access Control): Kubernetes supports fine-grained access control through RBAC, which is essential for securing your clusters.

- Automate CI/CD: Integrate Kubernetes with your CI/CD pipeline to automate deployments, updates, and rollbacks. This can help ensure that your software delivery process is efficient and reliable.
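Namespaces and RBAC work together. A Role grants permissions inside one namespace, and a RoleBinding assigns that Role to a user, group, or service account. The minimal sketch below builds a read-only Role for pods and their logs, plus a RoleBinding, and prints them as JSON for `kubectl apply -f`. The staging namespace, the pod-reader role name, and the user jane are hypothetical.

```python
from __future__ import annotations

import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_pods_role(namespace: str, role_name: str = "pod-reader") -> dict:
    """Role allowing read-only access to pods and their logs in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group, where pods live
            "resources": ["pods", "pods/log"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_role_to_user(namespace: str, user: str, role_name: str = "pod-reader") -> dict:
    """RoleBinding that grants the named Role to a single user in the namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    # Hypothetical namespace and user name.
    items = [read_only_pods_role("staging"), bind_role_to_user("staging", "jane")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The Role grants only read verbs, so the bound user can inspect pods and logs in staging but cannot change anything there or see other namespaces.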

Conclusion

Kubernetes is an incredibly powerful tool that can transform the way businesses like OpsNexa manage and deploy their applications. By understanding the basics of Kubernetes and how to use its features effectively, you can streamline your development, improve scalability, and ensure the reliability of your applications.

Whether you’re running a local cluster with Minikube, relying on managed Kubernetes services such as EKS or AKS in the cloud, or using tools like Helm to simplify complex deployments, Kubernetes offers a wealth of features to help you manage your containerized workloads at scale.