What is a Kubernetes Deployment? A Comprehensive Guide by OpsNexa

In the world of container orchestration, Kubernetes has become the industry standard for managing containerized applications at scale. Kubernetes allows organizations to deploy, manage, and scale their applications efficiently. One of the core concepts in Kubernetes is the Deployment, a key abstraction that enables automated management of applications.

But what exactly is a Kubernetes Deployment? How does it work, and why is it essential for running applications in Kubernetes? In this blog, we will dive into the details of Kubernetes Deployments, explore their functionality, and provide you with insights into how OpsNexa can help you effectively manage your Kubernetes workloads.

What is a Kubernetes Deployment?

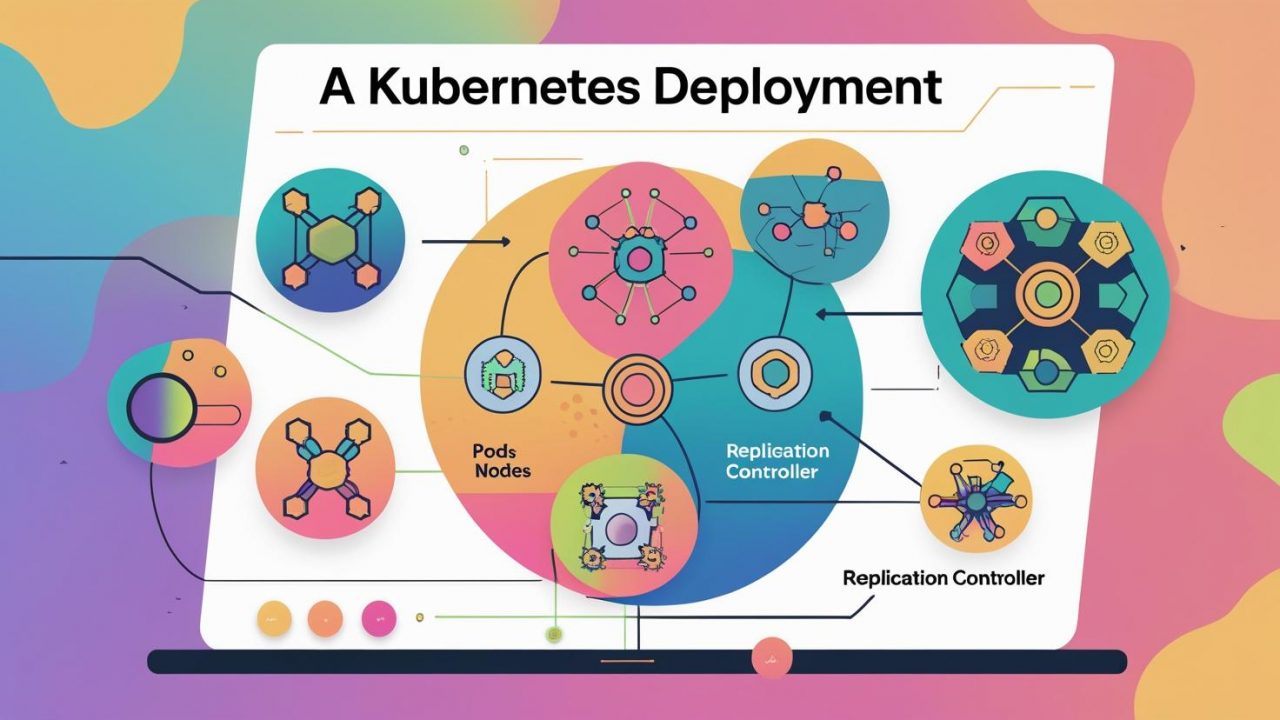

A Kubernetes Deployment is a higher-level object that manages the lifecycle of an application: it manages ReplicaSets, which in turn manage the application’s pods. It is primarily used to declare and manage the desired state of an application, ensuring that the correct number of replicas (pods) are running, updated, and available.

In simpler terms, a Kubernetes Deployment helps you deploy and maintain your application in a Kubernetes cluster, and it automates tasks like scaling, updating, and rolling back.

Key Features of a Kubernetes Deployment:

- Declarative Updates: A Kubernetes Deployment allows you to specify the desired state of your application, such as the number of replicas or the container image version, and Kubernetes will automatically ensure that the current state matches your desired state.
- Rolling Updates: One of the most powerful features of a Deployment is its ability to perform rolling updates. This means that you can update your application without downtime, with Kubernetes gradually replacing old pods with new ones to ensure continuous availability.
- Scaling: Kubernetes Deployments make it easy to scale applications by adjusting the number of replicas. Kubernetes will automatically ensure the correct number of pod replicas are running, whether you’re scaling up or down.
- Self-Healing: If a pod fails or becomes unresponsive, Kubernetes will automatically replace it to maintain the desired number of replicas. This ensures that your application stays available even if individual pods fail.

How Does a Kubernetes Deployment Work?

A Kubernetes Deployment works through the following components and mechanisms:

1. Pod Template

The pod template defines the pod that the Deployment manages. It specifies the container images, ports, volume mounts, environment variables, and other resources required for the application. When you create a Deployment, Kubernetes will create pods based on the template, ensuring that each pod runs a container with the correct configuration.
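As a sketch of what a pod template can contain, here is the `template` section of a Deployment spec with an image, a port, an environment variable, and a volume mount. The label `app: my-app`, port 80, the variable `LOG_LEVEL`, the mount path, and the `my-app-config` ConfigMap are hypothetical values chosen for illustration.

```yaml
# Fragment: the spec.template section of a Deployment (not a complete manifest).
template:
  metadata:
    labels:
      app: my-app                # assumed label; must match the Deployment's selector
  spec:
    containers:
      - name: my-app-container
        image: my-app:1.0
        ports:
          - containerPort: 80    # assumed port
        env:
          - name: LOG_LEVEL      # hypothetical environment variable
            value: "info"
        volumeMounts:
          - name: config
            mountPath: /etc/my-app   # hypothetical mount path
    volumes:
      - name: config
        configMap:
          name: my-app-config    # hypothetical ConfigMap providing the files
```

Every pod the Deployment creates is stamped from this template, so changing any field in it (the image, an environment variable, a mount) counts as a new version of the application.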

2. ReplicaSet

The ReplicaSet ensures that the specified number of pod replicas are running at all times. When you create a Deployment, Kubernetes creates a ReplicaSet for it. When you later change the pod template, the Deployment creates a new ReplicaSet and scales the old one down, while changing only the replica count resizes the existing ReplicaSet. The ReplicaSet tracks the number of available pods and maintains the desired count: if a pod is deleted or lost, for example because its node fails, the ReplicaSet creates a replacement.
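To make the Deployment-to-ReplicaSet relationship concrete, below is an abridged sketch of the kind of ReplicaSet the Deployment controller creates for a Deployment assumed to be named `my-app`. Kubernetes computes a `pod-template-hash` from the pod template and adds it to the ReplicaSet's name, labels, and selector, and it records the owning Deployment in `ownerReferences`. The hash value shown is illustrative, and fields such as `uid` and the copied pod template are omitted.

```yaml
# Abridged view of a ReplicaSet owned by a Deployment (as `kubectl get rs -o yaml` might show it).
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: my-app-7c9d8b5f4d            # <deployment-name>-<pod-template-hash>; hash is illustrative
  labels:
    app: my-app
    pod-template-hash: 7c9d8b5f4d
  annotations:
    deployment.kubernetes.io/revision: "1"   # revision number used by rollout history and undo
  ownerReferences:
    - apiVersion: apps/v1
      kind: Deployment
      name: my-app                   # the Deployment that controls this ReplicaSet
      controller: true
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      pod-template-hash: 7c9d8b5f4d  # keeps this ReplicaSet's pods separate from other revisions
  # template: copied from the Deployment's pod template (omitted here)
```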

3. Rolling Update Strategy

When you update a Deployment’s pod template (e.g., by changing the container image), Kubernetes uses the default RollingUpdate strategy to update the pods gradually. Old pods are replaced with new ones in a controlled manner, so your application remains available during the update process.
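The pace of a rolling update is controlled by the Deployment's `strategy` field. The fragment below spells out the default settings: `maxSurge` caps how many pods may exist above the desired replica count during the update, and `maxUnavailable` caps how many may be unavailable. Both accept an absolute number or a percentage.

```yaml
# Fragment: goes under the Deployment's spec (these values are the defaults).
strategy:
  type: RollingUpdate        # the alternative, Recreate, stops all old pods before starting new ones
  rollingUpdate:
    maxSurge: 25%            # extra pods allowed above spec.replicas during the update (rounded up)
    maxUnavailable: 25%      # pods allowed to be unavailable during the update (rounded down)
```

With `replicas: 3`, for example, the 25% surge rounds up to one extra pod and the 25% unavailability rounds down to zero, so Kubernetes adds one new pod and waits for it to become available before removing an old one.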

4. Rollback

If an update goes wrong, Kubernetes lets you roll back to a previous revision of the Deployment. This is especially useful when a rollout introduces issues that affect the availability or functionality of your application.
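Rolling back relies on the older ReplicaSets that a Deployment keeps, scaled down to zero, after each rollout. The fragment below shows the two spec fields most related to this, at their default values. Note that Kubernetes only reports a stalled rollout as failed once the progress deadline passes; it does not roll back on its own, so you would trigger the rollback yourself with `kubectl rollout undo deployment/my-app`, optionally adding `--to-revision=N` (the name `my-app` is assumed).

```yaml
# Fragment: fields under the Deployment's spec that relate to rollbacks.
revisionHistoryLimit: 10       # old ReplicaSets kept so you can roll back to them (default: 10)
progressDeadlineSeconds: 600   # after this long without progress, the rollout is reported as failed (default: 600)
```

Setting `revisionHistoryLimit` to 0 saves a few objects in the cluster but removes the history, and with it the ability to roll back.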

5. Labels and Selectors

A Kubernetes Deployment uses labels to identify the pods it manages. Labels are key-value pairs that are attached to the pods and other resources, and selectors are used by the Deployment to find and manage the pods with specific labels.
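In a manifest, the link between a Deployment and its pods is the `selector` together with the labels on the pod template. The labels in the template must satisfy the selector, and for `apps/v1` Deployments the selector cannot be changed after creation. A sketch of the relevant fields, using an assumed `app: my-app` label and a hypothetical extra `tier: backend` label:

```yaml
# Fragment: the parts of a Deployment spec that tie it to its pods.
selector:
  matchLabels:
    app: my-app          # the Deployment manages pods carrying this label
template:
  metadata:
    labels:
      app: my-app        # must satisfy the selector above, or the API server rejects the Deployment
      tier: backend      # extra labels are allowed; the selector only needs to match a subset
```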

Creating a Kubernetes Deployment

Creating a Kubernetes Deployment involves defining a YAML or JSON manifest that specifies the desired state of your application. A simple Deployment manifest contains the fields described below.
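Here is a minimal example of such a manifest. The replica count, container name, and image come from the description that follows; the Deployment name `my-app`, the `app: my-app` label, and container port 80 are assumptions made for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                 # assumed Deployment name
  labels:
    app: my-app                # assumed label
spec:
  replicas: 3                  # keep three pod replicas running
  selector:
    matchLabels:
      app: my-app              # manage pods that carry this label
  template:                    # pod template used to create each replica
    metadata:
      labels:
        app: my-app            # must match the selector above
    spec:
      containers:
        - name: my-app-container
          image: my-app:1.0
          ports:
            - containerPort: 80   # assumed port the application listens on
```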

Explanation of the YAML:

- apiVersion: The API version being used (in this case, apps/v1 for Deployments).
- kind: Specifies the type of object (in this case, a Deployment).
- metadata: Contains information about the Deployment, including its name and labels.
- spec: The specification that defines the Deployment’s behavior.
  - replicas: The number of pod replicas to maintain.
  - selector: Defines how the Deployment will find the pods it manages (based on labels).
  - template: Defines the pod template, which includes the container image and port to expose.

When you apply this YAML file with `kubectl apply -f deployment.yaml`, Kubernetes creates a Deployment that keeps three pod replicas running, each with a container named my-app-container that runs the my-app:1.0 image.

Benefits of Using a Kubernetes Deployment

1. Declarative Configuration

With a Kubernetes Deployment, you declare the desired state of your application (e.g., the number of replicas or the container version), and Kubernetes ensures that the actual state matches the desired state. This declarative approach simplifies application management, as you don’t need to worry about manually handling the state of each individual pod.

2. Automated Scaling

Kubernetes Deployments make it easy to scale your application. When you change the number of replicas in the Deployment spec, Kubernetes automatically creates or removes pods to match. You can do this manually, for example by editing the manifest or with `kubectl scale`, or automatically with a Horizontal Pod Autoscaler (HPA).
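For the automatic case, a minimal HorizontalPodAutoscaler sketch is shown below. It targets a Deployment assumed to be named `my-app`; the HPA name, replica bounds, and 70% CPU target are illustrative values. CPU-based autoscaling also assumes a metrics provider such as metrics-server is installed and that the containers declare CPU requests, since utilization is measured against those requests.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa              # hypothetical HPA name
spec:
  scaleTargetRef:               # the Deployment whose replica count this HPA adjusts
    apiVersion: apps/v1
    kind: Deployment
    name: my-app                # assumed Deployment name
  minReplicas: 3
  maxReplicas: 10               # illustrative upper bound
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # illustrative target: 70% of requested CPU across pods
```

Once an HPA manages a Deployment, it owns the replica count, so manual changes to `replicas` will be overridden on the next scaling decision.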

3. Zero-Downtime Updates

During a rolling update, Kubernetes keeps your application available as long as the new pods pass their readiness checks. It gradually replaces old pods with new ones, so users can continue to access the application without interruption.
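A rolling update only avoids downtime if Kubernetes can tell when a new pod is ready to serve traffic. One common pattern, sketched below, adds a readiness probe to the container and sets `maxUnavailable: 0` so that old pods are removed only after their replacements report ready. The `/healthz` endpoint and port 80 are assumptions about the application, and the container name and image follow the example manifest.

```yaml
# Fragment: under the Deployment's spec.
strategy:
  type: RollingUpdate
  rollingUpdate:
    maxSurge: 1          # start at most one extra pod at a time
    maxUnavailable: 0    # never drop below the desired number of ready pods
template:
  spec:
    containers:
      - name: my-app-container
        image: my-app:1.0
        readinessProbe:            # the pod receives Service traffic only while this check passes
          httpGet:
            path: /healthz         # hypothetical health endpoint
            port: 80               # assumed container port
          initialDelaySeconds: 5
          periodSeconds: 10
```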

4. Self-Healing and High Availability

Kubernetes ensures that your application remains highly available. If a pod fails or crashes, the Deployment automatically creates a new pod to replace it. This self-healing feature helps maintain application uptime and minimizes the impact of failures.
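Self-healing happens at two levels: the Deployment's ReplicaSet replaces pods that are deleted or lost (for example, when a node fails), while the kubelet restarts containers that exit. For a container that hangs without exiting, a liveness probe lets the kubelet detect the problem and restart it. A sketch, assuming the same hypothetical `/healthz` endpoint on port 80:

```yaml
# Fragment: a container entry inside the Deployment's pod template.
containers:
  - name: my-app-container
    image: my-app:1.0
    livenessProbe:             # kubelet restarts the container if this check keeps failing
      httpGet:
        path: /healthz         # hypothetical health endpoint
        port: 80               # assumed container port
      periodSeconds: 10
      failureThreshold: 3      # restart after three consecutive failed checks
```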

5. Simplified Rollbacks

If an update causes issues, you can roll back to a previous version of the application with a single command (`kubectl rollout undo`). Kubernetes keeps a revision history for each Deployment by retaining its older ReplicaSets, so you can return to a known working state if needed.
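`kubectl rollout history deployment/my-app` lists the recorded revisions of a Deployment (the name `my-app` is assumed), and its CHANGE-CAUSE column is taken from the `kubernetes.io/change-cause` annotation. Setting that annotation whenever you change the pod template, as sketched below, makes it easier to pick a known good revision; the message and the my-app:1.1 image are hypothetical.

```yaml
# Fragment: only the fields that change in this update are shown.
metadata:
  name: my-app
  annotations:
    kubernetes.io/change-cause: "Update image to my-app:1.1"   # shown in the CHANGE-CAUSE column
spec:
  template:
    spec:
      containers:
        - name: my-app-container
          image: my-app:1.1      # hypothetical new version; a template change creates a new revision
```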

When to Use a Kubernetes Deployment?

Kubernetes Deployments are best suited for applications that require:

- High availability: You need to ensure that multiple replicas of your application are always running.
- Rolling updates: You want to update your application without downtime.
- Scaling: You need to scale your application up or down based on demand.
- Self-healing: You want Kubernetes to automatically replace failed pods to maintain the desired application state.

Common Use Cases for Kubernetes Deployments:

- Microservices: Deploying microservices in a Kubernetes cluster, ensuring that each service has multiple replicas for fault tolerance.
- Web Applications: Running scalable, high-availability web applications.
- API Services: Managing APIs with rolling updates and zero-downtime deployments.

How OpsNexa Can Help You with Kubernetes Deployments

At OpsNexa, we specialize in Kubernetes management and support. Whether you are new to Kubernetes or looking to optimize your deployments, we can help you:

- Design and implement Kubernetes deployments tailored to your applications.
- Automate deployment workflows with CI/CD pipelines for efficient and reliable updates.
- Scale applications based on demand with Horizontal Pod Autoscalers (HPA).
- Ensure high availability with automated pod management and self-healing mechanisms.
- Optimize resources and reduce costs by effectively managing your deployments.

Contact OpsNexa for DevOps architect and DevOps hiring solutions.